Nearly 20 years have now passed since the TRON Project began. Of all its results, ITRON in particular has spread widely, and it is at present the most widely used real-time kernel for embedded systems. However, because ITRON adopted a policy of "weak standardization," specifications differ in fine details from implementation to implementation, which has made it difficult to reuse and distribute software resources that run on ITRON. Accordingly, the T-Engine project, whose greatest objective is middleware distribution, was started, and T-Kernel was developed as a new real-time kernel. As the amount of middleware that runs on T-Kernel grows, the efficiency of embedded system development is expected to rise markedly.

On the other hand, many years of development have produced a large body of middleware that runs on ITRON. If ITRON middleware can be ported to T-Kernel efficiently, developers will be able to enjoy the benefits of T-Kernel sooner. This book is aimed mainly at engineers who have developed software for ITRON, and it explains the approach and the points to consider when porting ITRON software to T-Kernel.

Table 1 compares ITRON and T-Kernel. From the start of the project in 1984, ITRON took hardware performance into account and adopted "weak standardization," which allows optimization and subsetting, as its policy; as a result, it developed into a vendor lock-in kernel business. T-Kernel, on the other hand, keeps the kernel program as a single source code and pursues "strong standardization," and on that basis aims at an open platform that maximizes the reuse and distribution of middleware. One could say that the two have taken completely different approaches.

Nevertheless, T-Kernel was also developed on the basis of ITRON as a real-time kernel, so anyone with experience developing software on ITRON can immediately start developing software on T-Kernel. However, to develop software that can be distributed as middleware, it is necessary to understand the functions unique to T-Kernel.

Table 1. Comparison of ITRON and T-Kernel (aspects compared: basic design, business aspects, technical aspects)

3.1 T-Kernel Merits

T-Kernel is a compact, multitasking, real-time OS, and in these respects it is the same as ITRON. Its system call API specifications are also almost the same as ITRON's, so engineers used to ITRON can immediately develop T-Kernel application programs as well.

Furthermore, T-Kernel supports functions aimed at middleware distribution. By utilizing these, it is possible to create middleware with high reusability and distributability.

3.2 Developing Programs on T-Kernel

With ITRON, the entire system is normally linked statically into a single program. T-Kernel, in contrast, supports a mechanism for dynamically loading and executing each functional module, and the units of these modules are subsystems and device drivers. Of course, program development through static linking is also possible.

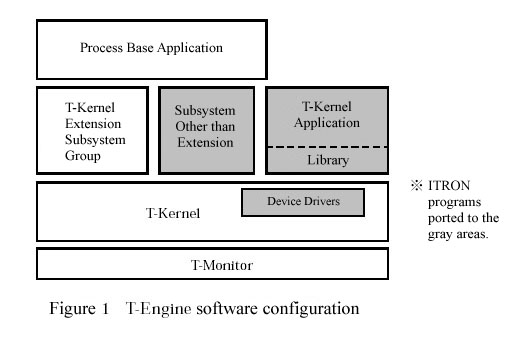

Fig. 1 shows the software configuration of T-Engine. In it, the components that can use T-Kernel system calls directly are device drivers, subsystems, and applications that run directly on T-Kernel. T-Kernel Extension in the figure is a group of subsystems that provides higher-level system functions, such as process management, virtual memory, event management, and a file system, to the applications above it.

When we port ITRON middleware to T-Kernel, we normally port it either as a subsystem or device driver, or as a library. In either case, the result is a program that runs directly on T-Kernel and uses T-Kernel system calls.

On the other hand, there is practically no case in which we would port ITRON middleware or applications as T-Kernel Extension programs. The reason is that T-Kernel Extension programs run in user mode as processes and therefore cannot issue T-Kernel system calls directly.

3.3 Device Drivers

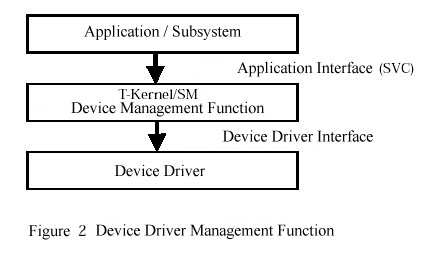

How device drivers are incorporated into the system is prescribed by the device management functions of T-Kernel/SM (Fig. 2). Specifically, the specification prescribes an application interface through which higher-level programs call driver functions, and a device driver interface that the driver side provides. To develop a device driver, we create the device driver interface functions and register them with the system using the tk_def_dev system call.

Device drivers can be created as independent modules, and they can also be registered in and deleted from the system dynamically.

Furthermore, standard device driver specifications have been defined for standard devices (refer to Chapter 6).

3.4 Subsystems

A subsystem is a program that provides functions extending the system. By implementing middleware as a subsystem, it becomes possible to load it into the system, execute it, and delete it dynamically.

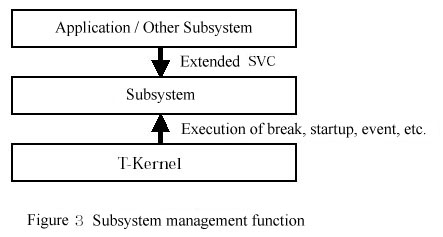

How a subsystem is incorporated into the system is prescribed by the subsystem management functions of T-Kernel/OS (Fig. 3). An application calls a subsystem function through the Extended SVC mechanism.

3.5 Libraries

Libraries are not prescribed in the T-Kernel specification, but middleware can also be created as a library, as in ITRON-based systems up to now, and used by linking it with an application.

In the case of a library in particular, it is necessary to pay attention to T-Format. T-Format is a set of rules for avoiding duplicate definitions of external symbols and the like when an application is linked with multiple libraries (refer to Chapter 5).

3.6 Applications

There are applications that run directly on top of T-Kernel, and applications that run on top of T-Kernel Extension. It is also possible to run both of these simultaneously.

Application programs that run directly on T-Kernel reside in shared space.

On the other hand, process-based applications are normally placed in process space, the so-called local space, where they are subject to virtual memory. They also cannot use T-Kernel system calls directly. Memory space is explained in Section 4.4.

4.1 Outline

There are several versions of the ITRON specification; here we explain the differences in system call APIs by comparing T-Kernel with µITRON4.0, the latest specification at present. We also explain the functions unique to T-Kernel, beginning with the MMU compatibility function.

Table 2 outlines the functions that each supports. As mentioned previously, most system calls are the same in ITRON and T-Kernel. Basically, by adapting a program to the differences in the details of the system call APIs, we can run an ITRON program on T-Kernel, and doing so is simple. However, to turn it into middleware with high reusability and distributability, we must also pay attention to the functions unique to T-Kernel.

Table 2. Outline of the functions supported by µITRON4.0 and T-Kernel. Function groups compared: Task Management; Time Management; Task-Dependent Synchronization; Task Exception Processing; Synchronization and Communication; System Status Management; Interrupt Management; Service Call Management; Extended Synchronization and Communication; System Configuration Management; Subsystem Management; System Memory Management; Address Space Management; Memory Pool; Device Management; I/O Port Access; Power Saving.

4.2 General Differences in System Call APIs


4.3 About Task Protection Levels

With T-Kernel, we specify the task protection level (the so-called execution level) when creating a task. Table 3 shows the standard protection level assignments. Tasks that make up the kernel, device drivers, and T-Kernel Extension are assigned to Level 0 (TA_RNG0) as system software. Tasks that make up applications running directly on T-Kernel are assigned to Level 1, and tasks that make up middleware other than Extension are normally assigned to Level 1 as well. Tasks that make up user applications running as processes are assigned to Level 3. Level 2 is normally not used.

On T-Kernel, the protection levels from which T-Kernel system calls can be issued are specified by "TSVCLimit" in the system configuration information. For example, if TSVCLimit is set to Level 1, T-Kernel system calls cannot be used from tasks at protection level 2 or protection level 3. Table 3 assumes TSVCLimit=1.
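The TSVCLimit rule can be modeled in a few lines of C. This is a sketch of the rule as described above, not kernel code; the enum and the function name are hypothetical.

```c
#include <stdbool.h>

/* Protection levels of Table 3; 0 is the most privileged. */
enum prot_level { LEVEL0 = 0, LEVEL1 = 1, LEVEL2 = 2, LEVEL3 = 3 };

/* Model of the TSVCLimit rule (not kernel code): a task may issue
   T-Kernel system calls only if its protection level is numerically
   no greater than TSVCLimit. */
bool may_issue_tk_svc(enum prot_level task_level, enum prot_level tsvc_limit)
{
    return task_level <= tsvc_limit;
}
```

With TSVCLimit=1, as assumed in Table 3, Level 0 and Level 1 tasks pass the check, while Level 3 tasks belonging to processes do not.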

As for functional differences between tasks at protection level 0 and those at protection level 1, there are the memory access restrictions (refer to the next section) and the fact that tasks at protection level 0 cannot use the task exception handler function.

While an Extended SVC is executing, the protection level becomes Level 0. At that time, the protection level in effect before the Extended SVC was called is preserved as access privilege information. The SVC side refers to this information when checking whether the task that issued the call is requesting an illegal memory access.

Table 3. Standard protection level assignments (TSVCLimit=1)

| Protection level | Assignment |
|---|---|
| Level 0 | System software (OS, device drivers) |
| Level 1 | System applications |
| Level 2 | Reserved |
| Level 3 | User applications (processes) |

4.4 About the MMU Compatibility Function

In T-Kernel, memory management is carried out using an MMU, and mechanisms for task space/shared space and memory protection are provided. In practice, these functions are realized in combination with T-Kernel Extension.

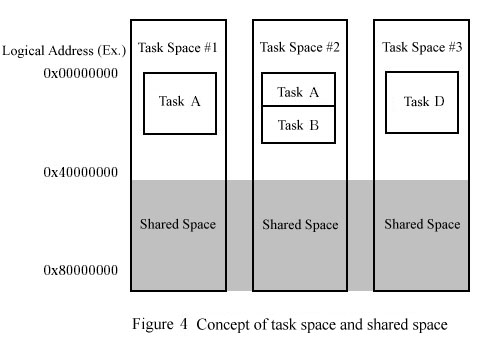

Fig. 4 illustrates task space and shared space. Shared space always holds the same memory contents, whereas task space holds, and allows access to, only the contents of the local space (so-called process space) that is selected at that moment. To make programs highly distributable, it is necessary to understand this concept well before programming. For example, when accessing a memory region in task space, you must make sure that the desired task space is selected at that moment. The task space can be switched with the tk_set_tsp system call.

Furthermore, when Extension supports virtual memory, we must also be careful about whether memory is resident or non-resident. For example, when a memory region secured by a process is accessed from DMA or an interrupt handler, that region must have been made resident. To make it resident, we use a function such as LockSpace of T-Kernel/SM.
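A minimal sketch of the lock, transfer, and release pattern for a buffer handed over by a process. The functions xyz_lock_space and xyz_unlock_space are hypothetical stand-ins for T-Kernel/SM LockSpace and its release counterpart (UnlockSpace); here they only count locks so that the sketch compiles off-target, and the transfer routine is assumed to be synchronous.

```c
typedef int ER;
#define E_OK 0

/* Hypothetical stand-ins for LockSpace()/UnlockSpace(); on a real system
   call the T-Kernel/SM functions instead. These only count held locks. */
int xyz_resident_locks;
ER xyz_lock_space(void *addr, int len)   { (void)addr; (void)len; xyz_resident_locks++; return E_OK; }
ER xyz_unlock_space(void *addr, int len) { (void)addr; (void)len; xyz_resident_locks--; return E_OK; }

/* Driver-supplied routine that performs the DMA transfer (assumed synchronous). */
typedef ER (*xyz_dma_fn)(void *addr, int len);

/* Keep a process buffer resident for the whole transfer and release it
   afterwards, whether or not the transfer succeeded. */
ER xyz_dma_transfer(void *buf, int len, xyz_dma_fn run_dma)
{
    ER er = xyz_lock_space(buf, len);
    if (er < E_OK) {
        return er;              /* could not make the region resident */
    }
    er = run_dma(buf, len);
    xyz_unlock_space(buf, len);
    return er;
}

/* Example DMA routine: records how many locks were held while it ran. */
int xyz_locks_seen_by_dma;
ER xyz_fake_dma(void *addr, int len)
{
    (void)addr;
    (void)len;
    xyz_locks_seen_by_dma = xyz_resident_locks;
    return E_OK;
}
```

If the transfer completes asynchronously, the release would move to the completion path instead, since the region must stay resident until the DMA has finished.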

A protection level is also set for memory regions. Specifically, we specify it with parameters of the tk_cre_mpf and tk_cre_mpl system calls, which we use when creating memory pools, and of the tk_get_smb system call, which we use when allocating system memory. A task cannot access a memory region whose protection level is more privileged (numerically lower) than its own. By specifying an appropriate protection level for each task and memory region, we can build a system that is robust in terms of security.
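The access rule for memory regions can be sketched as follows, assuming that the protection level is carried in the TA_RNGn bits of the attribute passed to tk_cre_mpf, tk_cre_mpl, or tk_get_smb. The bit values below are assumptions for illustration; take the real ones from tkernel.h.

```c
#include <stdbool.h>

typedef unsigned int ATR;

/* Protection-level attribute bits; values assumed for this sketch. */
#define TA_RNG0     0x00000000U
#define TA_RNG1     0x00000100U
#define TA_RNG2     0x00000200U
#define TA_RNG3     0x00000300U
#define TA_RNG_MASK TA_RNG3

/* Extract the protection level (0..3) encoded in a region attribute. */
static int region_level(ATR attr)
{
    return (int)((attr & TA_RNG_MASK) >> 8);
}

/* A task may access a region only if the region is not more privileged
   (numerically lower) than the task's own protection level. */
bool task_can_access(int task_level, ATR region_attr)
{
    return task_level <= region_level(region_attr);
}
```

For example, task_can_access(1, TA_RNG0) is false, which is why a pool that Level 1 middleware must touch has to be created with TA_RNG1.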

Table 4 lists the MMU-related functions. They cover changing the task space, checking access privileges for memory regions, and converting between logical and physical addresses. When providing services in response to requests from process-based applications, we must handle those requests appropriately using these system calls.

As an aside, although the T-Kernel specification assumes four protection levels, some systems provide only two: a privileged mode and a user mode. In that case, levels 0 to 2 are assigned to privileged mode and level 3 to user mode.

On systems that have no MMU, or do not use one, we do the following. First, protection levels 0 to 3 are all handled identically. All programs are regarded as running at physical addresses, and task space is regarded as nonexistent. The memory access privilege check function then always returns E_OK. In this way, programs written for systems that use an MMU can also run on systems without one. Conversely, programs that assume a system without an MMU cannot be used on a system that uses an MMU, and they end up as software with low distributability.
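One way to keep a single source for both kinds of system is to put the access privilege check behind a wrapper that is compiled out when there is no MMU. This is a sketch under assumptions: the USE_MMU macro and the xyz_ wrapper name are hypothetical, and the ChkSpaceR prototype reflects our understanding of T-Kernel/SM and should be checked against the kernel headers.

```c
typedef int ER;
#define E_OK 0

#ifdef USE_MMU
/* MMU build: forward to the T-Kernel/SM address space check.
   Prototype assumed; confirm it against your tkernel.h. */
extern ER ChkSpaceR(void *addr, int len);
#endif

/* Check that the caller of an Extended SVC may read the buffer it passed.
   Build with -DUSE_MMU on MMU systems; without an MMU the check always
   succeeds, so the same middleware source serves both configurations. */
ER xyz_chk_caller_read(void *buf, int len)
{
#ifdef USE_MMU
    return ChkSpaceR(buf, len);
#else
    (void)buf;
    (void)len;
    return E_OK;
#endif
}
```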

Table 4. MMU-related functions

| Function group | Function |
|---|---|
| T-Kernel/OS Task Management Functions | Referencing of task space |
| | Setting of task space |
| T-Kernel/SM Address Space Management Functions | Address space setting |
| | Address space check (read access privilege) |
| | Address space check (read/write access privilege) |
| | Address space check (read/execute access privilege) |
| | Make address space resident |
| | Release resident address space |
| | Acquisition of physical address |
| | Mapping of a physical address to a logical address |
| | Release of logical space |

4.5 About Subsystem Management Functions

In T-Kernel, Extended SVC handlers are not defined separately; they are defined as part of subsystems. Subsystems are defined with the tk_def_ssy system call. Table 5 shows the parameters specified when defining a subsystem. So that a subsystem can cooperate with the system as a whole, we also define its startup function, break function, and so on.
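A sketch of the definition information and Extended SVC handler for a small hypothetical subsystem. The xyz_dssy type is a reduced mirror of the Table 5 fields, not the real T_DSSY; the function code, parameter packet, priority, and E_RSFN value are assumptions.

```c
#include <stddef.h>

typedef int ER; typedef int INT; typedef int ID; typedef unsigned int ATR;
#define E_OK   0
#define E_RSFN (-10)   /* reserved function code; value assumed */

/* Reduced, hypothetical mirror of the pk_dssy fields in Table 5. The real
   T_DSSY in tkernel.h may differ in member types and order. */
typedef struct {
    ATR  ssyatr;                                   /* subsystem attributes */
    INT  ssypri;                                   /* subsystem priority */
    INT  (*svchdr)(void *pk_para, INT fncd);       /* Extended SVC handler */
    void (*breakfn)(ID tskid);                     /* break function */
    ER   (*startupfn)(ID resid, INT info);         /* startup function */
    ER   (*cleanupfn)(ID resid, INT info);         /* cleanup function */
    ER   (*eventfn)(INT evttyp, ID resid, INT info); /* event processing */
    INT  resblksz;                                 /* resource block size */
} xyz_dssy;

/* Hypothetical function code and parameter packet of this subsystem. */
#define XYZ_FN_ADD 1
struct xyz_add_para { INT a; INT b; };

/* Extended SVC handler: one entry point that dispatches on the code. */
INT xyz_svchdr(void *pk_para, INT fncd)
{
    switch (fncd) {
    case XYZ_FN_ADD: {
        const struct xyz_add_para *p = pk_para;
        return p->a + p->b;
    }
    default:
        return E_RSFN;          /* unknown function code */
    }
}

ER xyz_startup(ID resid, INT info) { (void)resid; (void)info; return E_OK; }
ER xyz_cleanup(ID resid, INT info) { (void)resid; (void)info; return E_OK; }

/* On T-Kernel, the corresponding T_DSSY would be passed to
   tk_def_ssy(ssid, &dssy) from the subsystem's main function. */
const xyz_dssy xyz_ssy_def = {
    .ssyatr    = 0,
    .ssypri    = 1,             /* placeholder priority */
    .svchdr    = xyz_svchdr,
    .breakfn   = NULL,          /* none in this sketch */
    .startupfn = xyz_startup,
    .cleanupfn = xyz_cleanup,
    .eventfn   = NULL,
    .resblksz  = 0,             /* no resource management block needed */
};
```

Dispatching on a function code, with one parameter packet per function, keeps the handler a single entry point, which fits the way an Extended SVC handler receives a parameter packet and a function code.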

Table 5. Parameters specified at subsystem definition (tk_def_ssy)

| Parameter | Meaning |
|---|---|
| ssid | Subsystem ID |
| pk_dssy | Subsystem definition information |

Details of pk_dssy:

| Member | Meaning |
|---|---|
| ssyatr | Subsystem attributes |
| ssypri | Subsystem priority |
| svchdr | Extended SVC handler address |
| breakfn | Break function |
| startupfn | Startup function |
| cleanupfn | Cleanup function |
| eventfn | Event processing function |
| resblksz | Resource management block size |

4.6 About Device Management Functions

These functions are prescribed in the T-Kernel/SM specification. Device drivers are registered with the tk_def_dev system call, and Table 6 shows the parameters specified at registration. In T-Kernel, the APIs through which an application issues input/output requests to a device driver and waits for their completion are defined as system calls, and the driver must implement the interface functions of Table 6 (execfn, waitfn, and so on) so as to satisfy them.
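The division of labor between execfn and waitfn can be sketched with a loopback device. The request type below is a simplified, hypothetical stand-in for T_DEVREQ; on a real system the device management functions (such as tk_rea_dev, tk_wri_dev, and tk_wai_dev) call these interface functions, and the error values are assumed.

```c
#include <string.h>

typedef int ER; typedef int INT; typedef int TMO;
#define E_OK    0
#define E_PAR   (-17)   /* values assumed; see tkernel.h */
#define E_TMOUT (-50)

/* Simplified, hypothetical request record; the real T_DEVREQ has more. */
typedef struct {
    INT   cmd;      /* XYZ_CMD_READ or XYZ_CMD_WRITE */
    char *buf;
    INT   size;     /* requested size */
    INT   asize;    /* actual size transferred */
    INT   done;     /* set when processing has completed */
    ER    error;
} xyz_devreq;

#define XYZ_CMD_READ  1
#define XYZ_CMD_WRITE 2

/* Loopback device: data written can be read back. */
static char xyz_loop_data[64];
static INT  xyz_loop_len;

/* execfn: start processing a request. This device finishes at once; a
   real driver would typically start the hardware and complete the
   request later from its interrupt processing. */
ER xyz_execfn(xyz_devreq *req, TMO tmout, void *exinf)
{
    (void)tmout;
    (void)exinf;
    if (req->size < 0) {
        return E_PAR;
    }
    INT n = req->size < (INT)sizeof xyz_loop_data ? req->size : (INT)sizeof xyz_loop_data;
    if (req->cmd == XYZ_CMD_WRITE) {
        memcpy(xyz_loop_data, req->buf, (size_t)n);
        xyz_loop_len = n;
    } else {
        if (n > xyz_loop_len) {
            n = xyz_loop_len;
        }
        memcpy(req->buf, xyz_loop_data, (size_t)n);
    }
    req->asize = n;
    req->error = E_OK;
    req->done  = 1;
    return E_OK;
}

/* waitfn: return the index of a completed request in reqs[0..nreq-1].
   A real driver would block here for up to tmout. */
INT xyz_waitfn(xyz_devreq *reqs, INT nreq, TMO tmout, void *exinf)
{
    (void)tmout;
    (void)exinf;
    for (INT i = 0; i < nreq; i++) {
        if (reqs[i].done) {
            return i;
        }
    }
    return E_TMOUT;
}
```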

Table 6. Parameters specified at device driver registration (tk_def_dev)

| Parameter | Meaning |
|---|---|
| devnm | Physical device name |
| ddev | Device registration information |
| idev | Returns device initialization information |

Details of ddev:

| Member | Meaning |
|---|---|
| drvatr | Driver attributes |
| devatr | Device attributes |
| nsub | Number of subunits |
| blksz | Block size of device-specific data |
| openfn | Open function |
| closefn | Close function |
| execfn | Processing start function |
| waitfn | Completion wait function |
| abortfn | Processing abort function |
| eventfn | Event function |

T-Format is a set of rules that make it possible to build a system by combining middleware provided by multiple vendors. Mainly, it sets out rules for file formats and for naming global symbols, such as global variable and global function names.

For global symbol names, to avoid duplicate definitions of the same name, each vendor applies to the T-Engine Forum for a vendor code and guarantees uniqueness by attaching that code to its symbol names. For example, the vendor code of the YRP Ubiquitous Networking Laboratory is "unl", so its symbol names look like the following:

| File names | unl_mpeg2_decode.c, libunl_mpeg2.a |

| Global function names | void unl_mpeg2_decode ( . . . ); |

| Global variable names | char unl_mpeg2_id[4]; |

| Constant macro | #define UNL_MPEG2_CNST2 2 |
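The naming rules above can be sketched in a small library source. Here "xyz" is a hypothetical vendor code standing in for one assigned by the T-Engine Forum, and the CRC-8 routine only serves as a vehicle for showing which symbols carry the prefix.

```c
/* xyz_crc.c: the file name carries the vendor prefix.
   "xyz" is a hypothetical vendor code; use the one assigned to you. */
#include <stddef.h>

#define XYZ_CRC8_POLY 0x07u             /* constant macro: upper-case prefix */

unsigned char xyz_crc8_last;            /* global variable: prefixed */

/* Internal helper: static (internal linkage), so it is not a global
   symbol and cannot clash at link time. */
static unsigned char crc8_step(unsigned char crc, unsigned char byte)
{
    crc ^= byte;
    for (int i = 0; i < 8; i++) {
        crc = (crc & 0x80u) ? (unsigned char)((crc << 1) ^ XYZ_CRC8_POLY)
                            : (unsigned char)(crc << 1);
    }
    return crc;
}

/* Global function: prefixed, so it cannot clash with another vendor's. */
unsigned char xyz_crc8(const unsigned char *data, size_t len)
{
    unsigned char crc = 0;
    for (size_t i = 0; i < len; i++) {
        crc = crc8_step(crc, data[i]);
    }
    xyz_crc8_last = crc;
    return crc;
}
```

As general C practice, keeping helpers static reduces the number of names that must be managed under the prefix rules in the first place.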

Software that runs on T-Kernel, and library-format software in particular, must conform to T-Format. For details, refer to the T-Format Specification, which at present has been released on pages for members of the T-Engine Forum.

The T-Engine Forum has decided on standard device driver specifications for major devices. Table 7 shows the devices for which standard specifications have been settled so far.

If a device driver is developed according to this standard specification, middleware developed against the same specification will run on it immediately. Conversely, if middleware that uses a device is developed against the standard device driver specification, it will be compatible with multiple boards at once. At present, the Standard Device Driver Specification has been released on pages for members of the T-Engine Forum.


7.1 Level of Porting

Several levels of completeness can be considered for a port:

(1) A level at which only the system call APIs have been changed to those of T-Kernel

(2) In addition to (1), a level at which the software has been converted into subsystems and device drivers based on the T-Kernel specifications and made T-Format compliant

(3) In addition to (1) and (2), a level at which the software also operates in an environment that uses an MMU

Level (1) suffices if we simply want to build, on T-Kernel, a system that has used ITRON up to now. However, considering the reusability and distributability of software resources, we recommend going all the way to levels (2) and (3) as a porting guideline.

From here on, we shall explain points that you should pay attention to concerning each level.

7.2 Modification of System Call APIs

If you make modifications while paying attention to the items explained in Section 4.2, there should be no problem. Because ITRON and T-Kernel support nearly the same system call APIs, they can be matched with almost mechanical modifications.
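Because most of the correspondence is one-to-one, the mapping can be illustrated with a thin macro layer. This sketch uses off-target stubs in place of the real T-Kernel calls so that it compiles anywhere; TMO_POL and TMO_FEVR follow the tmout=0 and tmout=-1 entries of the correspondence list in Appendix A. Editing each call site directly remains the more transparent route.

```c
typedef int ER; typedef int ID; typedef int INT; typedef int TMO;
#define E_OK     0
#define TMO_POL  0      /* poll: tmout=0 in the correspondence list */
#define TMO_FEVR (-1)   /* wait forever: tmout=-1 in the correspondence list */

/* Off-target stubs that only record their arguments. On a real system
   these are the T-Kernel system calls declared in tkernel.h. */
ID  stub_id;
INT stub_cnt;
TMO stub_tmout;
ER tk_wai_sem(ID semid, INT cnt, TMO tmout) { stub_id = semid; stub_cnt = cnt; stub_tmout = tmout; return E_OK; }
ER tk_sig_sem(ID semid, INT cnt)            { stub_id = semid; stub_cnt = cnt; return E_OK; }
ER tk_slp_tsk(TMO tmout)                    { stub_tmout = tmout; return E_OK; }

/* µITRON4.0 names mapped onto T-Kernel calls. ITRON semaphore calls
   always use a count of 1, while T-Kernel takes the count explicitly;
   the polling and timed variants collapse into one call with tmout. */
#define wai_sem(id)        tk_wai_sem((id), 1, TMO_FEVR)
#define pol_sem(id)        tk_wai_sem((id), 1, TMO_POL)
#define twai_sem(id, tmo)  tk_wai_sem((id), 1, (tmo))
#define sig_sem(id)        tk_sig_sem((id), 1)
#define isig_sem(id)       tk_sig_sem((id), 1)
#define slp_tsk()          tk_slp_tsk(TMO_FEVR)
#define tslp_tsk(tmo)      tk_slp_tsk(tmo)
```

Calls that take a caller-chosen ID, such as cre_sem, and the data queue calls marked x in the correspondence list cannot be covered this way and still need hand edits.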

However, when you actually try a port, you will probably be puzzled by the parameters for task creation, in particular by what to specify for the logical space IDs and resource IDs. We explain this in Section 7.5.

7.3 Converting into Subsystems and Device Drivers, and T-Format Compatibility Modifications

As explained in Chapter 3, middleware on T-Kernel is created as a subsystem, a device driver, or a library. Converting it into subsystems and device drivers allows it to be loaded and unloaded dynamically as independent modules, which raises both system development efficiency and middleware distributability. Guidelines for implementing subsystems and device drivers are being drawn up by a separate working group on middleware distribution in the T-Engine Forum.

When converting into subsystems, the entry routines through which an application calls a subsystem's Extended SVCs are linked into the application. Accordingly, the entry function names must be symbol names that conform to T-Format. Furthermore, the tools in a T-Engine development kit can automatically generate the Extended SVC call routines from the header file of the function definitions.
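A sketch of an application-side entry routine, assuming a hypothetical vendor code "xyz" and a hypothetical function code. The direct call to xyz_svc_gate stands in for the kernel trap that a generated entry routine would perform; the point here is the T-Format name and the packing of arguments into a parameter packet.

```c
typedef int INT;
#define E_RSFN (-10)   /* reserved function code; value assumed */

/* Function code and parameter packet shared by the entry routine and
   the subsystem's SVC handler (hypothetical). */
#define XYZ_FN_ADD 1
struct xyz_add_para { INT a; INT b; };

/* Stand-in for the Extended SVC gate. On T-Kernel the generated entry
   routine traps into the kernel, which runs the subsystem's handler at
   protection level 0; here the handler logic is called directly. */
static INT xyz_svc_gate(void *pk_para, INT fncd)
{
    const struct xyz_add_para *p = pk_para;
    return (fncd == XYZ_FN_ADD) ? p->a + p->b : E_RSFN;
}

/* Entry routine linked into the application. Because it becomes a
   global symbol of the application, its name carries the vendor
   prefix that T-Format requires. */
INT xyz_add(INT a, INT b)
{
    struct xyz_add_para para = { a, b };   /* pack arguments into a packet */
    return xyz_svc_gate(&para, XYZ_FN_ADD);
}
```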

On the other hand, when we create software as libraries, it is necessary to name all the external symbols in accordance with T-Format.

7.4 Modifications Aimed at an Environment that Utilizes an MMU

To make programs into middleware that can also be used on systems with an MMU, they must be made compatible with process management and virtual memory. Although we omit a detailed explanation in this book, as needed we switch the task space and the resource ID to which tasks belong, and make memory regions resident. We also check access privileges to see whether a higher-level application is requesting an illegal memory access.

Even when programs are created as libraries, the same operations are necessary if they provide services by creating tasks internally.

7.5 Concrete Example of Program Modification

We show an example of porting from ITRON to T-Kernel. It is given for reference only; we are not saying that it must be followed.

[1] Task creation program example

| Program on ITRON |


| Program on T-Kernel |


Explanation

[Point 1] T-Format

We have named the external symbol in accordance with T-Format.

[Point 2] Attribute specification

We specified the attributes as follows.

An ITRON program being ported runs directly on T-Kernel (it does not run as a process on T-Kernel Extension). Because it runs only in shared space, we do not specify TA_TASKSPACE; the initial task space can be left undefined. Also, because this task is not part of a process, we do not specify TA_RESID; the task then belongs to the system resource group. Since neither TA_TASKSPACE nor TA_RESID is specified, the uatb, lsid, and resid members of the parameter (the T_CTSK structure) are ignored and need not be set.

As for the task protection level, as explained in Section 4.3, "TSVCLimit" determines whether T-Kernel system calls can be issued, and we have assumed the assignment shown in Table 3. Accordingly, tasks that make up device drivers and the core software of the system may be given TA_RNG0, and tasks that make up middleware close to applications TA_RNG1. Note that tasks given TA_RNG0 cannot use the task exception processing functions.

[Point 3] Automatic assignment of object IDs

The ID number is not specified by the caller; the kernel assigns it and returns it as the return value.
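A minimal sketch of the task creation port discussed in Points 1 to 3. The T_CTSK here is a reduced stand-in, the attribute values are assumptions, and tk_cre_tsk is stubbed so that the sketch compiles off-target; the task name, priority, and stack size are hypothetical.

```c
#include <stddef.h>

typedef int ID; typedef int INT; typedef int PRI; typedef unsigned int ATR;
typedef void (*FP)(INT stacd, void *exinf);   /* task entry, typed for this sketch */

/* Attribute values assumed for this sketch; use those in tkernel.h. */
#define TA_HLNG      0x00000001U
#define TA_RNG0      0x00000000U
#define TA_RNG1      0x00000100U
#define TA_TASKSPACE 0x00100000U   /* intentionally not specified below */
#define TA_RESID     0x00200000U   /* intentionally not specified below */

/* Reduced stand-in for T_CTSK. The real structure also has uatb, lsid,
   resid and other members; uatb, lsid and resid are ignored when neither
   TA_TASKSPACE nor TA_RESID is specified, so they are omitted here. */
typedef struct {
    void *exinf;
    ATR   tskatr;
    FP    task;
    PRI   itskpri;
    INT   stksz;
} T_CTSK;

/* Stub of tk_cre_tsk: the kernel picks the ID and returns it. */
ATR stub_tskatr;
ID tk_cre_tsk(T_CTSK *pk_ctsk)
{
    static ID next_id = 1;
    if (pk_ctsk == NULL || pk_ctsk->task == NULL) {
        return -17;                   /* E_PAR; value assumed */
    }
    stub_tskatr = pk_ctsk->tskatr;
    return next_id++;
}

/* Former ITRON task body, renamed with the vendor prefix (T-Format).
   A T-Kernel task receives the start code and the extended information. */
void xyz_net_task(INT stacd, void *exinf)
{
    (void)stacd;
    (void)exinf;
    /* ... original task processing ... */
}

/* ITRON:    cre_tsk(NET_TSK_ID, &ctsk);   -- ID chosen by the caller
   T-Kernel: id = tk_cre_tsk(&ctsk);       -- ID returned by the kernel */
ID xyz_create_net_task(void)
{
    T_CTSK ctsk = {
        .exinf   = NULL,
        .tskatr  = TA_HLNG | TA_RNG1,   /* middleware close to applications */
        .task    = xyz_net_task,
        .itskpri = 10,                  /* placeholder priority */
        .stksz   = 2048,                /* placeholder stack size */
    };
    return tk_cre_tsk(&ctsk);          /* > 0: task ID, < 0: error code */
}
```

The created task is then started with tk_sta_tsk, which the correspondence list pairs with sta_tsk.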

[2] Fixed length memory pool creation program example

| Program on top of ITRON |


| Program on top of T-Kernel |


Explanation

[Point 1] Differences in the detailed specification

On T-Kernel, a memory pool cannot be created by specifying its memory area; the kernel secures the area.

[Point 2] Attribute specification

If the pool should not be affected by the tk_dis_wai system call, we specify the TA_NODISWAI attribute. This does not necessarily apply when break processing is implemented in subsystems.

If the region is also accessed from tasks at protection level 1, we specify TA_RNG1. We specify TA_RNG0 if it is accessed only from tasks at protection level 0.

[Point 3] Memory regions

The memory regions secured here become resident memory in shared space.
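A corresponding sketch for the fixed-length memory pool, under the same kind of assumptions: a reduced T_CMPF, a stubbed tk_cre_mpf, assumed attribute values, and hypothetical names and sizes. It shows the two changes discussed above: no pool area is passed in, and the protection level is chosen according to which tasks must access the blocks.

```c
#include <stddef.h>

typedef int ID; typedef int INT; typedef unsigned int ATR;

/* Attribute values assumed for this sketch; use those in tkernel.h. */
#define TA_TFIFO 0x00000000U
#define TA_RNG0  0x00000000U
#define TA_RNG1  0x00000100U
#define TA_RNG3  0x00000300U   /* also the mask of the protection-level bits */

/* Reduced stand-in for T-Kernel's T_CMPF. Unlike µITRON4.0's T_CMPF,
   it has no member for the start address of the pool area. */
typedef struct {
    void *exinf;
    ATR   mpfatr;
    INT   mpfcnt;   /* number of blocks */
    INT   blfsz;    /* block size in bytes */
} T_CMPF;

/* Stub of tk_cre_mpf: the real call secures the pool area itself
   (resident memory in shared space) and returns the new pool ID. */
ATR stub_mpfatr;
ID tk_cre_mpf(T_CMPF *pk_cmpf)
{
    static ID next_id = 1;
    if (pk_cmpf == NULL || pk_cmpf->mpfcnt <= 0 || pk_cmpf->blfsz <= 0) {
        return -17;                   /* E_PAR; value assumed */
    }
    stub_mpfatr = pk_cmpf->mpfatr;
    return next_id++;
}

/* ITRON:    static char area[8 * 256];
             T_CMPF c = { TA_TFIFO, 8, 256, area };  cre_mpf(BUF_MPF_ID, &c);
   T-Kernel: neither a pool area nor an ID is passed in. Add TA_NODISWAI
   to mpfatr if the pool must not be affected by tk_dis_wai. */
ID xyz_create_buf_pool(int level1_access)
{
    T_CMPF cmpf = {
        .exinf  = NULL,
        .mpfatr = TA_TFIFO | (level1_access ? TA_RNG1 : TA_RNG0),
        .mpfcnt = 8,      /* placeholder block count */
        .blfsz  = 256,    /* placeholder block size */
    };
    return tk_cre_mpf(&cmpf);
}
```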

When people develop programs on ITRON, they probably often use a proprietary compiler provided by a microcomputer vendor or a development environment vendor, together with an integrated development environment on Windows.

In the case of T-Kernel, too, various vendors provide their own tools. However, because a common reference for development tools is needed to realize middleware distribution, we have made the GNU tools, which support many CPU types, the standard. Developers who have not used GNU tools before may therefore be a little perplexed at first. Also, at present, the standard development host is a Linux PC, so developers used to the Windows environment may need some time to get used to the editors and file operations.

In this area, various development environments for T-Engine will probably be commercialized and provided from now on.

As a software migration guide from ITRON to T-Kernel, we have brought together the items to watch when porting, focusing on the differences between the two. We will be glad if it helps readers understand how to create highly distributable software.

____________________

A. List of System Call Correspondences between µITRON4.0 and T-Kernel (created with the cooperation of NEC Electronics Corporation)

B. Migration Report: Mitsubishi Electric Corporation, Renesas Solutions Corporation

C. Migration Report: Toshiba Information Systems (Japan) Corporation

____________________

This list shows the corresponding system calls for the case where applications that used a µITRON4.0-based real-time OS are ported to T-Kernel. Accordingly, system calls unique to T-Kernel are not included in the table, apart from those that will probably become necessary when porting.

Furthermore, some items are implementation dependent in each real-time OS, so the correspondence does not necessarily hold exactly as shown in this list in every case. Please refer to the list with that in mind.

| µITRON4.0 | T-Kernel | µITRON4.0 | T-Kernel |

| Task Management Functions | | dly_tsk | tk_dly_tsk |

| cre_tsk | tk_cre_tsk (can't specify ID) | Task Exception Processing | |

| acre_tsk | tk_cre_tsk | def_tex | tk_def_tex |

| del_tsk | tk_del_tsk | ras_tex | tk_ras_tex |

| act_tsk | x | iras_tex | tk_ras_tex |

| iact_tsk | x | dis_tex | tk_dis_tex |

| can_act | x | ena_tex | tk_ena_tex |

| sta_tsk | tk_sta_tsk | - | tk_end_tex (handler termination time) |

| ext_tsk | tk_ext_tsk | sns_tex | tk_ref_tsk |

| exd_tsk | tk_exd_tsk | ref_tex | tk_ref_tsk |

| ter_tsk | tk_ter_tsk | Synchronization and Communications Functions | |

| chg_pri | tk_chg_pri | Semaphore | |

| get_pri | tk_ref_tsk | cre_sem | tk_cre_sem (can't specify ID) |

| ref_tsk | tk_ref_tsk | acre_sem | tk_cre_sem |

| ref_tst | tk_ref_tsk | del_sem | tk_del_sem |

| Task-Dependent Synchronization | | sig_sem | tk_sig_sem |

| slp_tsk | tk_slp_tsk (tmout=-1) | isig_sem | tk_sig_sem |

| tslp_tsk | tk_slp_tsk (tmout) | wai_sem | tk_wai_sem (tmout=-1) |

| wup_tsk | tk_wup_tsk | pol_sem | tk_wai_sem (tmout=0) |

| iwup_tsk | tk_wup_tsk | twai_sem | tk_wai_sem (tmout) |

| can_wup | tk_can_wup | ref_sem | tk_ref_sem |

| rel_wai | tk_rel_wai | Event Flag | |

| irel_wai | tk_rel_wai | cre_flg | tk_cre_flg (can't specify ID) |

| sus_tsk | tk_sus_tsk | acre_flg | tk_cre_flg |

| rsm_tsk | tk_rsm_tsk | del_flg | tk_del_flg |

| frsm_tsk | tk_frsm_tsk | set_flg | tk_set_flg |


| iset_flg | tk_set_flg | loc_mtx | tk_loc_mtx (tmout=-1) |

| clr_flg | tk_clr_flg | ploc_mtx | tk_loc_mtx (tmout=0) |

| wai_flg | tk_wai_flg (tmout=-1) | tloc_mtx | tk_loc_mtx (tmout) |

| pol_flg | tk_wai_flg (tmout=0) | unl_mtx | tk_unl_mtx |

| twai_flg | tk_wai_flg (tmout) | ref_mtx | tk_ref_mtx |

| ref_flg | tk_ref_flg | Message Buffer | |

| Data Queue | | cre_mbf | tk_cre_mbf (can't specify ID) |

| cre_dtq | x | acre_mbf | tk_cre_mbf |

| acre_dtq | x | del_mbf | tk_del_mbf |

| del_dtq | x | snd_mbf | tk_snd_mbf (tmout=-1) |

| snd_dtq | x | psnd_mbf | tk_snd_mbf (tmout=0) |

| psnd_dtq | x | tsnd_mbf | tk_snd_mbf (tmout) |

| ipsnd_dtq | x | rcv_mbf | tk_rcv_mbf (tmout=-1) |

| tsnd_dtq | x | prcv_mbf | tk_rcv_mbf (tmout=0) |

| fsnd_dtq | x | trcv_mbf | tk_rcv_mbf (tmout) |

| ifsnd_dtq | x | ref_mbf | tk_ref_mbf |

| rcv_dtq | x | Rendezvous | |

| prcv_dtq | x | cre_por | tk_cre_por (can't specify ID) |

| trcv_dtq | x | acre_por | tk_cre_por |

| ref_dtq | x | del_por | tk_del_por |

| Mailbox | | cal_por | tk_cal_por (tmout=-1) |

| cre_mbx | tk_cre_mbx (can't specify ID) | tcal_por | tk_cal_por (tmout) |

| acre_mbx | tk_cre_mbx | acp_por | tk_acp_por (tmout=-1) |

| del_mbx | tk_del_mbx | pacp_por | tk_acp_por (tmout=0) |

| snd_mbx | tk_snd_mbx | tacp_por | tk_acp_por (tmout) |

| rcv_mbx | tk_rcv_mbx (tmout=-1) | fwd_por | tk_fwd_por |

| prcv_mbx | tk_rcv_mbx (tmout=0) | rpl_rdv | tk_rpl_rdv |

| trcv_mbx | tk_rcv_mbx (tmout) | ref_por | tk_ref_por |

| ref_mbx | tk_ref_mbx | ref_rdv | x |

| Extended Synchronization and Communications | | Memory Pool Management | |

| Mutex | | Fixed Length Memory Pool | |

| cre_mtx | tk_cre_mtx (can't specify ID) | cre_mpf | tk_cre_mpf (can't specify ID) |

| acre_mtx | tk_cre_mtx | acre_mpf | tk_cre_mpf |

| del_mtx | tk_del_mtx | del_mpf | tk_del_mpf |


| get_mpf | tk_get_mpf (tmout=-1) | stp_ovr | x |

| pget_mpf | tk_get_mpf (tmout=0) | ref_ovr | x |

| tget_mpf | tk_get_mpf (tmout) | System Status Management | |

| rel_mpf | tk_rel_mpf | rot_rdq | tk_rot_rdq |

| ref_mpf | tk_ref_mpf | irot_rdq | tk_rot_rdq |

| Variable Length Memory Pool | | get_tid | tk_get_tid |

| cre_mpl | tk_cre_mpl (can't specify ID) | iget_tid | tk_get_tid |

| acre_mpl | tk_cre_mpl | loc_cpu | (DI plus tk_dis_dsp) |

| del_mpl | tk_del_mpl | iloc_cpu | x |

| get_mpl | tk_get_mpl (tmout=-1) | unl_cpu | (EI plus tk_ena_dsp) |

| pget_mpl | tk_get_mpl (tmout=0) | iunl_cpu | x |

| tget_mpl | tk_get_mpl (tmout) | dis_dsp | tk_dis_dsp |

| rel_mpl | tk_rel_mpl | ena_dsp | tk_ena_dsp |

| ref_mpl | tk_ref_mpl | sns_ctx | tk_ref_sys |

| Time Management Functions | | sns_loc | (isDI plus tk_ref_sys) |

| System Time | | sns_dsp | tk_ref_sys |

| set_tim | tk_set_tim | sns_dpn | tk_ref_sys |

| get_tim | tk_get_tim | ref_sys | tk_ref_sys |

| isig_tim | x | Interrupt Management | |

| Cyclic Handler | | def_inh | tk_def_int |

| cre_cyc | tk_cre_cyc (can't specify ID) | cre_isr | x |

| acre_cyc | tk_cre_cyc | acre_isr | x |

| del_cyc | tk_del_cyc | del_isr | x |

| sta_cyc | tk_sta_cyc | ref_isr | x |

| stp_cyc | tk_stp_cyc | dis_int | DisableInt |

| ref_cyc | tk_ref_cyc | ena_int | EnableInt |

| Alarm Handler | | chg_ixx | x |

| cre_alm | tk_cre_alm (can't specify ID) | get_ixx | x |

| acre_alm | tk_cre_alm | Service Call Management | |

| del_alm | tk_del_alm | def_svc | Defined at time of subsystem registration |

| sta_alm | tk_sta_alm | cal_svc | x |

| stp_alm | tk_stp_alm | System Configuration Management | |

| ref_alm | tk_ref_alm | def_exc | x |

| Overrun Handler | | ref_cfg | tk_get_cfn |

| def_ovr | x | ref_ver | tk_ref_ver |

| sta_ovr | x | ||

We previously developed a TCP/IP function for use with µITRON. On this occasion, we ported this TCP/IP function as a subsystem, in the form of standard middleware for T-Engine, and verified its portability between the two OSs; we report on this work below.

Table 1 shows the base software from which the present development started and the development target on this occasion.

Table 1. Base software of the present development and development target (items compared: hardware, OS, TCP/IP function, LAN driver)

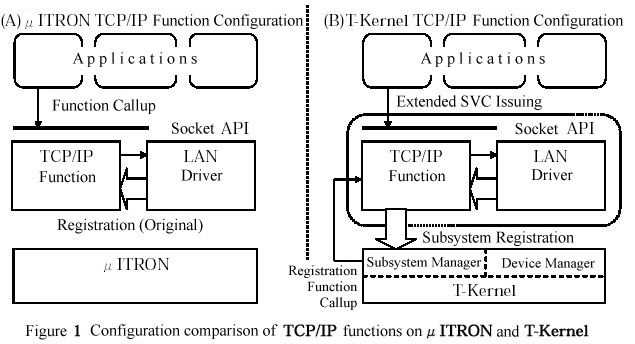

Fig. 1 compares the configuration of the TCP/IP function on µITRON and on T-Kernel. On µITRON ((A) in the figure), the TCP/IP function is a simple library, and applications use it through BSD socket APIs; the entire system forms a single execution module. On T-Kernel ((B) in the figure), on the other hand, the external interface provisions and naming rules must be followed to guarantee middleware distributability. From the viewpoint of guaranteeing middleware distributability, subsystems possess the following characteristics.

4.1 List of Items to Be Ported

Table 2 lists the items to be ported in order to turn the TCP/IP function for µITRON into a subsystem. In the present implementation, the LAN driver is part of the TCP/IP function subsystem.

| No. | Item | Porting details |

| (1) | Modification of the APIs of the kernel used | Modify the kernel API calls and the header file names |

| (2) | Assignment of a subsystem ID | Assign a unique ID and set it at subsystem registration |

| (3) | Assignment of a subsystem priority level | Assign an appropriate priority level and set it at subsystem registration |

| (4) | Definition of the resource group | Set the resource group to which the subsystem belongs |

| (5) | Definition of the resource management block | Because no new resource group is defined, no resource management block is secured |

| (6) | Description of the interface definition file of the extended SVC | Write the socket API definition file from which the socket API interface library source is generated automatically |

| (7) | Definition of the extended SVC handler | Create an extended SVC handler that calls each socket API processing function |

| (8) | Definition of the main function | Define the main function of the subsystem and describe the initialization processing |

| (9) | Definition of the four service routines for the kernel | Of the four functions (startup, cleanup, event, break), create only break, used to release a pending TCP listen/accept |

| (10) | T-Format compatibility | Add a unique subsystem prefix to C language global symbol names and to each type of file name |

We show below the concrete porting details of each item mentioned above.

(1) Modification of the APIs of the Kernel Used

When replacing the µITRON3.0 Specification APIs with T-Kernel Specification APIs, we also changed the header file name to the T-Kernel header (tkernel.h). In the present implementation, we made substitutions at a total of 36 call sites covering 15 kinds of kernel API. Table 3 classifies the individual modifications of the kernel APIs used.


| Modification Details of the APIs of the Kernel Used | Number of Corresponding Kernel APIs |

| Modification of API name only | 6 |

| Modification of API names and arguments | 7 |

| Modification of API arguments only | 1 |

| Modification of API used (by consolidation of corresponding API) | 1 |

| Total | 15 |
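Table 3 does not say which of the 15 APIs fell into each category. Purely as an illustration, the sketch below takes the µITRON3.0 semaphore calls, whose T-Kernel counterparts appear in the UDEOS correspondence list later in this document, and shows what a "name and argument" change and a "consolidation" look like when written as macros. It assumes the T-Kernel signatures `tk_sig_sem(ID semid, INT cnt)` and `tk_wai_sem(ID semid, INT cnt, TMO tmout)`; the `tk_*` bodies here are off-target stubs that only record their arguments, not the kernel.

```c
#include <stdint.h>

/* Stand-in kernel types; on the target these come from tkernel.h. */
typedef int32_t ER;
typedef int32_t ID;
typedef int32_t INT;
typedef int32_t TMO;

#define E_OK     0
#define TMO_POL  0     /* do not wait (polling) */
#define TMO_FEVR (-1)  /* wait forever */

/* Off-target stubs that only record their arguments so the mapping can
 * be checked on a PC; on the target the real kernel calls are used. */
static INT last_cnt;
static TMO last_tmout;

static ER tk_sig_sem(ID semid, INT cnt)
{
    (void)semid;
    last_cnt = cnt;
    return E_OK;
}

static ER tk_wai_sem(ID semid, INT cnt, TMO tmout)
{
    (void)semid;
    last_cnt = cnt;
    last_tmout = tmout;
    return E_OK;
}

/* Name and arguments change: the µITRON3.0 call always returns one resource. */
#define sig_sem(semid)         tk_sig_sem((semid), 1)

/* Consolidation: three µITRON3.0 calls map onto one T-Kernel call that
 * differs only in its timeout argument. */
#define wai_sem(semid)         tk_wai_sem((semid), 1, TMO_FEVR)
#define preq_sem(semid)        tk_wai_sem((semid), 1, TMO_POL)
#define twai_sem(semid, tmout) tk_wai_sem((semid), 1, (tmout))
```

Because the three waiting variants differ only in `tmout`, a single T-Kernel call covers all of them.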

(2) Assignment of Subsystem ID

Subsystem IDs 1 through 9 are reserved for T-Kernel, so IDs of 10 and above are available. In the present implementation, we provisionally assigned 50 as the subsystem ID of the TCP/IP function.

(3) Assignment of Subsystem Priority Level

The subsystem priority level determines the execution order of the four service routines (see (9) below) when multiple subsystems exist, so it must be set according to the dependency relations among the subsystems. In the present implementation, we provisionally assigned 16 as the subsystem priority level of the TCP/IP function.

(4) Definition of the Resource Group

Resource groups are group definitions centered on jointly used memory resources, and every task on T-Kernel must belong to exactly one resource group when it is created. In the present implementation, we did not define a new resource group; instead, the IP reception processing task of the TCP/IP function belongs to the default system resource group.

(5) Definition of the Resource Management Block

Resource management blocks are memory areas that a subsystem secures and manages for each resource group; their purpose and size can be defined as needed. Because the present implementation defines no new resource group, no resource management block was secured for the TCP/IP function either.

(6) Description of the Interface Definition File of the Extended SVC

On T-Kernel, subsystems and applications each form separate execution modules. Accordingly, an application that uses the BSD socket APIs must be linked with a TCP/IP subsystem interface library. Personal Media Corporation's software development environment for T-Kernel provides a tool (the mkiflib command) that automatically generates the assembly source code of this interface library. In the present implementation, we wrote a BSD socket API interface definition header file for use with this tool. We omit the details of its format; besides a function prototype declaration for each BSD socket API, the file describes items such as related header file names and the subsystem ID for the code that the tool generates.
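mkiflib emits assembly, and its exact output is not described here. As a conceptual sketch only, the C code below shows what one generated interface routine amounts to: it copies the API's arguments into a parameter packet and enters the subsystem through the extended SVC, passing a function code that identifies both the API and the subsystem. The function-code layout, the function numbers, `tcpip_socket`, and `call_extended_svc` are all hypothetical; the stub merely records its inputs so the sketch runs on a PC.

```c
#include <stdint.h>

typedef int32_t INT;
typedef int32_t FN;

#define TCPIP_SSID 50  /* provisional subsystem ID from the text */

/* ASSUMED function-code layout: per-API function number in the upper
 * bits, subsystem ID in the low 8 bits. Check the header generated for
 * the real encoding. */
#define MAKE_FNCD(fno) ((FN)(((fno) << 8) | TCPIP_SSID))

enum { FNO_SOCKET = 1, FNO_BIND = 2, FNO_CONNECT = 3 };  /* hypothetical */

/* Parameter packet for socket(): the arguments laid out in order. */
typedef struct {
    INT domain;
    INT type;
    INT protocol;
} SOCKET_PARA;

/* Stand-in for the trap into the extended SVC. The generated library
 * issues the real system call here; this stub only records what it was
 * given and pretends the subsystem returned descriptor 3. */
static FN          last_fncd;
static SOCKET_PARA last_socket_para;

static INT call_extended_svc(const void *pk_para, FN fncd)
{
    last_fncd = fncd;
    last_socket_para = *(const SOCKET_PARA *)pk_para;  /* only socket() uses this stub */
    return 3;
}

/* Roughly what one generated interface routine does: pack, then trap.
 * The name tcpip_socket is hypothetical. */
INT tcpip_socket(INT domain, INT type, INT protocol)
{
    SOCKET_PARA para = { domain, type, protocol };
    return call_extended_svc(&para, MAKE_FNCD(FNO_SOCKET));
}
```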

(7) Definition of the Extended SVC Handler

When an application calls a BSD socket API, a single extended SVC handler is started on the TCP/IP subsystem side. Inside this handler, we branch on the function number passed as an argument and execute the processing function that corresponds to each API. In the present implementation, we defined an extended SVC handler that calls the processing functions corresponding to the BSD socket APIs.
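The branching described above can be sketched as a `switch` on the function number. The handler shape loosely follows a T-Kernel extended SVC handler, which receives a parameter packet and a function code; the function numbers, packet layouts, processing functions, and the placeholder value of `E_RSFN` are assumptions for illustration, and the function number is assumed to sit above an 8-bit subsystem ID.

```c
#include <stdint.h>

typedef int32_t INT;
typedef int32_t FN;

#define E_RSFN (-10)  /* placeholder for the "reserved function code" error */

enum { FNO_SOCKET = 1, FNO_CLOSE = 2 };  /* hypothetical numbering */

typedef struct { INT domain; INT type; INT protocol; } SOCKET_PARA;
typedef struct { INT s; } CLOSE_PARA;

/* Hypothetical processing functions inside the TCP/IP subsystem. */
static INT tcpip_do_socket(INT domain, INT type, INT protocol)
{
    (void)domain; (void)type; (void)protocol;
    return 3;  /* pretend descriptor */
}

static INT tcpip_do_close(INT s)
{
    return s >= 0 ? 0 : -1;
}

/* Single extended SVC handler: branch on the function number, unpack
 * the parameter packet, and call the matching processing function.
 * ASSUMPTION: the function number sits above the low 8-bit subsystem ID. */
INT tcpip_svc_handler(void *pk_para, FN fncd)
{
    switch (fncd >> 8) {
    case FNO_SOCKET: {
        const SOCKET_PARA *p = (const SOCKET_PARA *)pk_para;
        return tcpip_do_socket(p->domain, p->type, p->protocol);
    }
    case FNO_CLOSE: {
        const CLOSE_PARA *p = (const CLOSE_PARA *)pk_para;
        return tcpip_do_close(p->s);
    }
    default:
        return E_RSFN;  /* unknown function number */
    }
}
```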

(8) Definition of the Main Function

Each subsystem must define a main function as its entry point. This function is called when the subsystem is loaded and unloaded; at load time, it executes the initialization processing and registers the subsystem with the T-Kernel Subsystem Manager. In the present implementation, the main function executes the initialization functions of the TCP/IP function and the LAN driver and then performs the TCP/IP subsystem registration.
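The following sketch puts items (2) through (5) and (8) together: the provisional subsystem ID 50 and priority 16 from the text, no resource management block, and only a break routine. The registration record is loosely modeled on a T-Kernel subsystem definition packet, and `register_subsystem`, `tcpip_init`, `lan_driver_init`, and the load/unload convention for the entry point are hypothetical stand-ins, not the T-Kernel API. The entry point is named `tcpip_main` only so that the sketch links on a PC.

```c
#include <stddef.h>
#include <stdint.h>

typedef int32_t ER;
typedef int32_t ID;
typedef int32_t INT;
typedef int32_t PRI;

#define E_OK  0
#define E_PAR (-17)  /* placeholder value; use the kernel's definition */

#define TCPIP_SSID  50  /* provisional subsystem ID from the text */
#define TCPIP_SSPRI 16  /* provisional subsystem priority from the text */

/* Hypothetical registration record. Unused routines stay NULL. */
typedef struct {
    PRI   ssypri;
    INT (*svchdr)(void *pk_para, INT fncd);
    void (*breakfn)(ID tskid);
    void *startupfn;   /* not defined in this port */
    void *cleanupfn;   /* not defined in this port */
    void *eventfn;     /* not defined in this port */
    INT   resblksz;    /* 0: no resource management block */
} SUBSYS_DEF;

/* Off-target stand-ins for the Subsystem Manager. */
static SUBSYS_DEF registered;
static int        is_registered;

static ER register_subsystem(ID ssid, const SUBSYS_DEF *def)
{
    if (ssid < 10) return E_PAR;  /* 1-9 are reserved for T-Kernel */
    registered = *def;
    is_registered = 1;
    return E_OK;
}

static ER unregister_subsystem(ID ssid)
{
    (void)ssid;
    is_registered = 0;
    return E_OK;
}

/* Hypothetical pieces of the TCP/IP subsystem. */
static ER   tcpip_init(void)      { return E_OK; }
static ER   lan_driver_init(void) { return E_OK; }
static INT  tcpip_svc(void *pk_para, INT fncd) { (void)pk_para; (void)fncd; return 0; }
static void tcpip_break(ID tskid) { (void)tskid; }

/* Entry point. ASSUMPTION: a negative ac means "unload", otherwise "load". */
ER tcpip_main(INT ac, char *av[])
{
    (void)av;
    if (ac < 0) {
        return unregister_subsystem(TCPIP_SSID);
    }

    ER er = tcpip_init();
    if (er < E_OK) return er;
    er = lan_driver_init();
    if (er < E_OK) return er;

    SUBSYS_DEF def = { TCPIP_SSPRI, tcpip_svc, tcpip_break,
                       NULL, NULL, NULL, 0 };
    return register_subsystem(TCPIP_SSID, &def);
}
```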

(9) Definition of Four Service Routines for the Kernel

By preparing, as needed, four service routines (startup, cleanup, event, and break) that T-Kernel calls at startup, at termination, and during execution, a subsystem can acquire and release the resources it uses, perform individual processing in response to external events, and interrupt processing when a task exception occurs. In the present implementation, we defined only a break function, which releases a pending TCP listen/accept when a task exception occurs (the other three are undefined).
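One way a break routine could release a blocked listen/accept is sketched below, assuming the kernel passes it the ID of the task in which the exception occurred. The accept path records each waiting task in a small table; the break routine marks the matching entry, and the accept path returns an error once it sees the mark. All names are hypothetical, and actually waking the task on the target (for example with `tk_rel_wai`) is left out so the sketch runs off-target.

```c
#include <stdbool.h>
#include <stdint.h>

typedef int32_t ID;

#define MAX_WAITERS 8

/* One entry per task currently blocked in TCP listen/accept. */
typedef struct {
    ID   tskid;
    bool in_use;
    bool broken;  /* set by the break routine */
} ACCEPT_WAIT;

static ACCEPT_WAIT waiters[MAX_WAITERS];

/* Called by the accept path before it starts waiting; returns a slot
 * number, or -1 if the table is full. */
int accept_wait_begin(ID tskid)
{
    for (int i = 0; i < MAX_WAITERS; i++) {
        if (!waiters[i].in_use) {
            waiters[i] = (ACCEPT_WAIT){ tskid, true, false };
            return i;
        }
    }
    return -1;
}

/* Checked by the accept path after each wakeup. */
bool accept_wait_broken(int slot)
{
    return waiters[slot].broken;
}

void accept_wait_end(int slot)
{
    waiters[slot].in_use = false;
}

/* Break routine: marks the wait of the given task as broken. On the
 * target, the task would also have to be woken from its kernel wait. */
void tcpip_break(ID tskid)
{
    for (int i = 0; i < MAX_WAITERS; i++) {
        if (waiters[i].in_use && waiters[i].tskid == tskid) {
            waiters[i].broken = true;
        }
    }
}
```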

(10) T-Format Compatibility

To guarantee the distributability of T-Kernel middleware, "T-Format" prescribes various code formats. Concretely, it consists of a source code style format, a standard binary format, and a standard document format. In the present implementation, we followed the source code style format's naming rules for C language global symbol names and for the various file names, and modified them by assigning the middleware name "renesas_tcp_ip" (Renesas' registered vendor code "renesas" and the present subsystem function name "tcpip"). Furthermore, so that the conventional BSD socket API names can still be used, we added a header file of common name definitions.
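The common-name header can be pictured as a set of macros that map the conventional BSD names onto prefixed symbols, so existing application code compiles unchanged. The sketch assumes the prefix is the middleware name followed by an underscore; the exact T-Format rules should be checked in the specification, and the function bodies are dummies.

```c
/* Prefixed global symbols (ASSUMED form: middleware name + "_").
 * The bodies are dummies for illustration. */
int renesas_tcp_ip_socket(int domain, int type, int protocol)
{
    (void)domain; (void)type; (void)protocol;
    return 3;  /* pretend descriptor */
}

int renesas_tcp_ip_close(int s)
{
    return s >= 0 ? 0 : -1;
}

/* Common-name definition header (hypothetical contents): maps the
 * conventional BSD names onto the prefixed symbols. */
#define socket(d, t, p) renesas_tcp_ip_socket((d), (t), (p))
#define close(s)        renesas_tcp_ip_close(s)

/* Existing application code compiles unchanged against the macros. */
int open_then_close(void)
{
    int s = socket(2, 1, 0);
    if (s < 0) {
        return -1;
    }
    return close(s);
}
```

Because the macros are function-like, an identifier such as `close` that is not followed by `(` is left untouched.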

In this report, we have described the modifications made when porting a TCP/IP function for µITRON as a T-Kernel subsystem. The porting work, from identifying the items to be ported to confirming normal operation, took about six weeks. No particular problems arose during that period, and we concluded that portability from µITRON to T-Kernel is good.

Middleware Porting Guide from ITRON to T-Kernel

An Approach Based on an OS Wrapper Function

You want to upgrade the present system into a next-generation product! How about T-Engine as the new platform? It looks as if changing the product's CPU would have little impact on the software, and the development platform is easy to get hold of . . .

Well then, the next platform is T-Engine. Let's port the current system to T-Engine.

But how much will it cost, and how long will it take?

* * * * * * * * * * * * * * * * * * * * *

I want to adopt T-Engine as a development and product platform, and I want to port the entire system, including drivers and middleware, to T-Kernel . . . that type of demand actually existed.

What type of response would you make to this demand?

We have been engaged in the development, sales, and porting of embedded drivers and middleware packages for about the last 10 years. We hold a large body of software, and while studying what cannot be done on the T-Engine platform, the compatibility between our µITRON-specification-based operating system UDEOS (µITRON3/4) and T-Kernel also became an issue at one point.

By comparing the T-Kernel and UDEOS specifications and discussing the matter with the various parties responsible for drivers and middleware, we got a rough picture of the necessary work. We could also give fairly accurate figures for the necessary expenses and time (hereafter, the costs).

However, this assumes that each party responsible for the drivers and middleware does the work. When one person wants to port and evaluate an entire system in a short time, won't some have to do the work without a full grasp of the current software structure and processing?

Couldn't we simply create something like a migration procedure manual and apply it to all the software in one go?

When actually entrusted with porting work on site, how far can the person in charge get? Where will they run into trouble? There were many things we wanted to find out through actual experience.

After spending a fair amount of time, and thanks to our firm's lucky (?) investment, we came to have a T-Engine development environment. We therefore tried an experiment in which we carried out driver/middleware porting and simple application development.

First, as the software to be ported, we selected our firm's NetNucleus, a TCP/IP protocol stack, and NetNucleus-wLAN, a wireless LAN driver. So that the scope of the port would not be too broad, we left out IPv6, IPSec, etc., and narrowed the targets to only the functions sufficient for ordinary communications.

Also, so that the demo application would be simple to develop, it only repeats one operation: displaying on the LCD image data received from a PC via wireless LAN.

Because the configuration above includes communications protocols, the program uses the following resources. Since task and handler dispatches and synchronous communication occur frequently, it seemed well suited to checking portability to a different platform.

| 19 interfaces |

| 3 resources |

After deciding this far, we brought in a person to be in charge who was completely in the dark about the circumstances. Then, we handed over the T-Engine package and the middleware that was the target of the port, and we relayed only the objective saying, "we want to create this type of application and display it at an exhibition."

The key point here is that we did not tell the person in charge the results of our prior survey. We had him do his best with only the existing documentation.

Summarizing the software targeted by the port and the environment, we get the following.

| Port target software | |

| Development environment | |

| Things handed over | |

| Objective relayed | |

| Target technician | |

First, we had him familiarize himself with the source code of the sample programs and applications while reading the documentation bundled with T-Engine, and then had him investigate the development environment.

When the time seemed right, we asked, "Have you gradually gotten a feel for it?" and instructed him to begin the port. Once the NetNucleus sample program ran, we instructed him to develop the demo application, and finally to raise its level of finish until it could run continuously for about a whole day at an exhibition. Apart from that, we hardly intervened.

It was hard on the person in charge, but we obtained a variety of useful data.

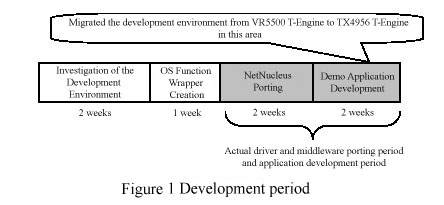

First, the development period was roughly as follows:

The work and the level of completion for each phase were as follows:


Apart from the process equivalent to a PC Card enabler, the processes that could not be made compatible simply with an OS function wrapper were the following.

| (1) | Successive Resource ID Guarantee |

| (2) | Interrupt Buffer and Context Differences |

| (3) | Double Definitions |

| (4) | Other Configurations |

In each of the revisions above the problem is clear, so once the workarounds themselves are documented, they should not be much of an obstacle to migration work.

The migration from the VR5500 T-Engine to the TX4956 T-Engine was completed almost entirely just by recompiling.

Our first impression on completing the experiment was that "the necessary period was shorter than we thought."

We think this was because UDEOS and T-Kernel are highly compatible. There were no problems with the contexts from which system calls may be issued, either. (Of course, the fact that the T-Engine development environment is easy to understand also helped.)

What took time beyond our estimates were the analysis of problems that occurred as a result of compiler optimization, and timing adjustments due to environment dependencies.

For a technician who knew nothing of the details, working alone, getting the exhibition demo application "running for the time being" took approximately 1.5 months. Excluding the time spent investigating the environment and creating the OS function wrapper, the work took a little more than three weeks, so we expect that porting other drivers and middleware probably won't require much labor either.

It seems we can also say that portability among different T-Engines is sufficiently high. We experimented with this as well: when the same person in charge ported the Macromedia Flash Player that runs on the TX4956 T-Engine to the ARM version of T-Engine, it ran almost just by recompiling, apart from the processing that directly controls the sound and graphics controllers and revisions concerning the memory placement of double variables.

When we port other middleware and applications, we expect to find further processes that the OS wrapper created on this occasion cannot handle, and we plan to make changes as they come up.

Compared with the µITRON implementation, the T-Kernel implementation, including our corrections, has some parts where the code overhead is larger.

We actually ran these parts and measured their execution times on the order of microseconds, and we plan to use the measurements for performance estimates and the like.

Finally, as a personal impression from the results of this experiment: if you are going to share software resources between µITRON and T-Kernel, I think coping by means of an OS wrapper is not a bad choice.

However, for a system with tight CPU resources, devising specialized processing might be better.

This concludes the report. The attachments that follow together make up the detailed report.

____________________

In this section, we have collected the points that caught our attention when we created the wrapper for the UDEOS/r39 OS functions (73 functions).

| Replaced with #define macros | 55 functions |

| Replaced with functions | 14 functions |

| Functions that could not be realized with equivalent processing | 4 functions |
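The split between macros and functions roughly follows whether only the name changes or the calling convention changes too. A sketch, assuming UDEOS follows the µITRON3.0 signatures `get_tid(ID *p_tskid)` and `can_wup(INT *p_wupcnt, ID tskid)` and that T-Kernel's `tk_get_tid()` and `tk_can_wup()` return their results directly; the `tk_*` bodies are off-target stubs. Error-code values also differ between the two kernels, which this sketch does not translate.

```c
#include <stdint.h>

/* Stand-in kernel types; on the target these come from the kernel headers. */
typedef int32_t ER;
typedef int32_t ID;
typedef int32_t INT;
typedef int32_t PRI;

#define E_OK 0

/* Off-target stubs standing in for the T-Kernel calls. */
static ID  current_task = 5;
static INT pending_wakeups = 2;
static PRI last_pri;

static ER tk_chg_pri(ID tskid, PRI tskpri)
{
    (void)tskid;
    last_pri = tskpri;
    return E_OK;
}

static ID tk_get_tid(void)
{
    return current_task;
}

/* Returns the number of cancelled wakeup requests, or a negative error code. */
static INT tk_can_wup(ID tskid)
{
    (void)tskid;
    INT n = pending_wakeups;
    pending_wakeups = 0;
    return n;
}

/* Same arguments, new name: a #define is enough. */
#define chg_pri(tskid, tskpri) tk_chg_pri((tskid), (tskpri))

/* The result moves from the return value to an output parameter,
 * so a small function is needed. */
ER get_tid(ID *p_tskid)
{
    *p_tskid = tk_get_tid();
    return E_OK;
}

ER can_wup(INT *p_wupcnt, ID tskid)
{
    INT r = tk_can_wup(tskid);
    if (r < 0) {
        return r;  /* passed through untranslated in this sketch */
    }
    *p_wupcnt = r;
    return E_OK;
}
```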

The following items could not be handled by replacement with simple wrapper functions.

(1) Fixed IDs

When registering a cyclic handler or an alarm handler, UDEOS requires the caller to specify an ID number, whereas T-Kernel assigns the ID automatically.

In the implementation on this occasion, the wrapper stores the ID number assigned by T-Kernel in a variable passed as an argument, so code that uses constants as ID numbers does not run correctly with this wrapper.
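A sketch of the wrapper style described above, with hypothetical names: `def_cyc_wrap` takes a pointer instead of a fixed ID and stores whatever ID the kernel assigns. `kernel_cre_cyc` and `CYC_PARAMS` are simplified stand-ins for `tk_cre_cyc` and its creation packet. Code that later refers to the handler must use the stored variable; a hard-coded ID may not match what the kernel chose.

```c
#include <stddef.h>
#include <stdint.h>

typedef int32_t  ER;
typedef int32_t  ID;
typedef uint32_t RELTIM;

#define E_OK 0

/* Simplified creation packet (stand-in for the real one). */
typedef struct {
    void (*handler)(void);
    RELTIM interval;
} CYC_PARAMS;

/* Off-target stand-in for tk_cre_cyc: the kernel picks the ID. */
static ID next_cycid = 1;

static ID kernel_cre_cyc(const CYC_PARAMS *p)
{
    (void)p;
    return next_cycid++;
}

/* Instead of taking a fixed ID, write the kernel-assigned ID to a
 * variable supplied by the caller. */
ER def_cyc_wrap(ID *p_cycid, void (*handler)(void), RELTIM interval)
{
    CYC_PARAMS p = { handler, interval };
    ID id = kernel_cre_cyc(&p);
    if (id < 0) {
        return id;  /* negative values are error codes */
    }
    *p_cycid = id;
    return E_OK;
}
```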

(2) TCY_INI (Reset of Startup Timing)

The act_cyc (cyclic handler control) function of UDEOS can be realized with tk_sta_cyc (start cyclic handler) and tk_stp_cyc (stop cyclic handler) in T-Kernel, but the TCY_INI attribute that can be specified to act_cyc cannot be reproduced this way.
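A minimal act_cyc wrapper might look like the following. It assumes the µITRON3.0 control values (`TCY_OFF` 0, `TCY_ON` 1, `TCY_INI` 2), which should be checked against the UDEOS headers, and it rejects `TCY_INI` with an error rather than ignoring it silently. That choice, the placeholder `E_NOSPT` value, and the stubbed start/stop calls are assumptions of the sketch.

```c
#include <stdbool.h>
#include <stdint.h>

typedef int32_t  ER;
typedef int32_t  ID;
typedef uint32_t UINT;

#define E_OK    0
#define E_NOSPT (-9)  /* placeholder; use the kernel's value */

/* Control values as in µITRON3.0 (assumed to match UDEOS). */
#define TCY_OFF 0x00u
#define TCY_ON  0x01u
#define TCY_INI 0x02u

/* Off-target stand-ins for tk_sta_cyc / tk_stp_cyc. */
static bool cyc_running;

static ER tk_sta_cyc(ID cycid) { (void)cycid; cyc_running = true;  return E_OK; }
static ER tk_stp_cyc(ID cycid) { (void)cycid; cyc_running = false; return E_OK; }

/* Start/stop map directly; TCY_INI has no equivalent, so this sketch
 * reports it as unsupported instead of silently dropping it. */
ER act_cyc(ID cycid, UINT cycact)
{
    if (cycact & TCY_INI) {
        return E_NOSPT;
    }
    return (cycact & TCY_ON) ? tk_sta_cyc(cycid) : tk_stp_cyc(cycid);
}
```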

(3) Specification of Absolute Time

In the UDEOS timer handler, either absolute time or relative time can be specified. T-Kernel supports relative time only.

(4) Timer Handler Startup Time

In the UDEOS timer handler, the startup time is specified as a 48-bit integer, but in T-Kernel it is specified as a 32-bit integer.
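Items (3) and (4) both affect how a start time is handed to T-Kernel. The sketch below converts a UDEOS-style 48-bit start time, absolute or relative, into a 32-bit relative time and reports an error when the value does not fit. It assumes both sides use the same time unit and treats an absolute time already in the past as an error; `to_tk_reltim` and the placeholder `E_PAR` value are hypothetical.

```c
#include <stdbool.h>
#include <stdint.h>

typedef int32_t ER;

#define E_OK  0
#define E_PAR (-17)  /* placeholder; use the kernel's value */

#define TIME48_MAX ((UINT64_C(1) << 48) - 1)

/* Convert a 48-bit start time to a 32-bit relative time.
 * now: current system time in the same unit as t48. */
ER to_tk_reltim(uint64_t t48, bool is_absolute, uint64_t now, uint32_t *p_reltim)
{
    if (t48 > TIME48_MAX) {
        return E_PAR;            /* not a 48-bit value */
    }
    uint64_t rel = t48;
    if (is_absolute) {
        if (t48 < now) {
            return E_PAR;        /* already in the past */
        }
        rel = t48 - now;         /* absolute -> relative */
    }
    if (rel > UINT32_MAX) {
        return E_PAR;            /* does not fit in 32 bits */
    }
    *p_reltim = (uint32_t)rel;
    return E_OK;
}
```

Converting absolute to relative time this way is only approximate, since time keeps passing between reading `now` and starting the timer.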

(5) System Time Setting and Referencing

The structure used by the system calls that set and reference the system time is 16+32 bits (H+UW) in UDEOS, whereas in T-Kernel it is 32+32 bits (W+UW).
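The two layouts can be converted as below. The field names of the UDEOS structure are assumed (modeled on µITRON3.0's `SYSTIME`), `hi`/`lo` mirror T-Kernel's `SYSTIM`, and the helper names are hypothetical. Widening is lossless, while narrowing must check that the upper word fits in 16 bits.

```c
#include <stdint.h>

/* UDEOS layout per the text: 16 + 32 bits (H + UW). Field names assumed. */
typedef struct {
    int16_t  utime;  /* upper 16 bits */
    uint32_t ltime;  /* lower 32 bits */
} UDEOS_SYSTIME;

/* T-Kernel layout per the text: 32 + 32 bits (W + UW). */
typedef struct {
    int32_t  hi;     /* upper 32 bits */
    uint32_t lo;     /* lower 32 bits */
} TK_SYSTIM;

/* Widening never loses information; int16_t -> int32_t sign-extends. */
TK_SYSTIM systime_to_tk(UDEOS_SYSTIME s)
{
    TK_SYSTIM t = { s.utime, s.ltime };
    return t;
}

/* Narrowing fails if the upper word does not fit in 16 bits. */
int systim_to_udeos(TK_SYSTIM t, UDEOS_SYSTIME *p)
{
    if (t.hi < INT16_MIN || t.hi > INT16_MAX) {
        return -1;
    }
    p->utime = (int16_t)t.hi;
    p->ltime = t.lo;
    return 0;
}
```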

(6) Transmission Order of the Message Buffer

In UDEOS, messages are sent to the message buffer starting with whichever one can be sent, whereas in T-Kernel they are sent in either FIFO or task priority order.

(7) Differences in the Shape of Structure Members

For the structures passed as arguments to the status reference system calls (ref_***), there are cases in which the type name and member names are the same (or T-Kernel has additional members) but a member's type differs.

For example, the presence or absence of waiting tasks is a bool in UDEOS but an int in T-Kernel. T-Kernel uses int so that, when tasks are waiting, it can store the ID of the task at the head of the queue.
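A wrapper can bridge this difference by converting the ID into a flag. The sketch below does this for the semaphore status, assuming the µITRON3.0 argument order (packet first) on the UDEOS side; the structure names, the stubbed `tk_ref_sem`, and its canned status are stand-ins for illustration.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

typedef int32_t ER;
typedef int32_t ID;
typedef int32_t INT;

#define E_OK 0

/* UDEOS-style status packet: waiting tasks as a bool (per the text). */
typedef struct {
    void *exinf;
    bool  wtsk;    /* true if any task is waiting */
    INT   semcnt;
} UDEOS_RSEM;

/* T-Kernel-style packet: wtsk is the ID of the first waiting task,
 * or 0 when no task is waiting. */
typedef struct {
    void *exinf;
    ID    wtsk;
    INT   semcnt;
} TK_RSEM;

/* Off-target stand-in for tk_ref_sem with canned status. */
static TK_RSEM fake_status = { NULL, 0, 1 };

static ER tk_ref_sem(ID semid, TK_RSEM *pk_rsem)
{
    (void)semid;
    *pk_rsem = fake_status;
    return E_OK;
}

/* Wrapper keeping the UDEOS argument order (packet first). */
ER ref_sem(UDEOS_RSEM *pk_rsem, ID semid)
{
    TK_RSEM tk;
    ER er = tk_ref_sem(semid, &tk);
    if (er < E_OK) {
        return er;
    }
    pk_rsem->exinf  = tk.exinf;
    pk_rsem->wtsk   = (tk.wtsk != 0);  /* task ID -> presence flag */
    pk_rsem->semcnt = tk.semcnt;
    return E_OK;
}
```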

(8) Differences Based on Original Implementation

As for the ref_flg (reference event flag status) of UDEOS, among the members of the argument structure T_RFLG, flgatr (event flag attributes) does not exist in T-Kernel. This member is an original implementation of UDEOS.

(9) Definition of Maximum Values

According to the T-Kernel documentation, the maximum wakeup request queuing count is implementation dependent, and no macro defines it. (Ordinary code that just references the return value is unaffected, but code that needs to be aware of the maximum value may lose portability.)

(10) INT1 Interrupt Measures

The INT1 interrupt, which according to the specification should not occur, occurred frequently under load. Because the symptom disappeared, at least on the surface, when we registered an INT1 interrupt handler that returns without doing anything, we registered this dummy handler in the demo.

We later obtained a revised kernel image in which the INT1 interrupt no longer occurs, so the dummy handler is not registered at present.

____________________

In this section, we list the correspondence between UDEOS/r39 and T-Kernel system calls.

| UDEOS/r39 | T-Kernel | Function |

| sta_tsk | tk_sta_tsk | Start up task |

| ext_tsk | tk_ext_tsk | Terminate task |

| ter_tsk | tk_ter_tsk | Force terminate task |

| dis_dsp | tk_dis_dsp | Forbid dispatch |

| ena_dsp | tk_ena_dsp | Allow dispatch |

| chg_pri | tk_chg_pri | Change task priority level |

| rot_rdq | tk_rot_rdq | Rotate task priority order |

| rel_wai | tk_rel_wai | Release wait status |

| sus_tsk | tk_sus_tsk | Move to forced wait status |

| rsm_tsk | tk_rsm_tsk | Release forced wait status |

| frsm_tsk | tk_frsm_tsk | Force release forced wait status |

| tslp_tsk | tk_slp_tsk | Move to wait wakeup (timeout) |

| wup_tsk | tk_wup_tsk | Wake up task |

| iwup_tsk | tk_wup_tsk | Wake up task |

| set_flg | tk_set_flg | Set event flag |

| clr_flg | tk_clr_flg | Clear event flag |

| snd_msg | tk_snd_mbx | Send to mailbox |

| isnd_msg | tk_snd_mbx | Send to mailbox |

| tsnd_mbf | tk_snd_mbf | Send to message buffer (timeout) |

| ret_int | return | Return from interrupt handler |

| rel_blk | tk_rel_mpl | Return variable length memory block |

| rel_blf | tk_rel_mpf | Return fixed length memory block |

| dly_tsk | tk_dly_tsk | Delay task |

| ret_tmr | return | Return from timer handler |

| UDEOS/r39 | T-Kernel | Function |

| ref_tsk | tk_ref_tsk | Reference task status |

| slp_tsk | tk_slp_tsk | Move to wait wakeup status |

| sig_sem | tk_sig_sem | Return semaphore |

| isig_sem | tk_sig_sem | Return semaphore |

| wai_sem | tk_wai_sem | Get semaphore |

| preq_sem | tk_wai_sem | Get semaphore (polling) |

| twai_sem | tk_wai_sem | Get semaphore (timeout) |

| ref_sem | tk_ref_sem | Reference semaphore status |

| wai_flg | tk_wai_flg | Wait event flag |

| pol_flg | tk_wai_flg | Wait event flag (polling) |

| twai_flg | tk_wai_flg | Wait event flag (timeout) |

| ref_flg | tk_ref_flg | Reference event flag status |

| rcv_msg | tk_rcv_mbx | Receive from mailbox |

| prcv_msg | tk_rcv_mbx | Receive from mailbox (polling) |

| trcv_msg | tk_rcv_mbx | Receive from mailbox (timeout) |

| ref_mbx | tk_ref_mbx | Reference mailbox status |

| snd_mbf | tk_snd_mbf | Send to message buffer |

| psnd_mbf | tk_snd_mbf | Send to message buffer (polling) |

| ref_mbf | tk_ref_mbf | Reference message buffer status |

| get_blk | tk_get_mpl | Get variable length memory block |

| pget_blk | tk_get_mpl | Get variable length memory block (polling) |

| tget_blk | tk_get_mpl | Get variable length memory block (timeout) |

| ref_mpl | tk_ref_mpl | Reference variable length memory pool status |

| get_blf | tk_get_mpf | Get fixed length memory block |

| pget_blf | tk_get_mpf | Get fixed length memory block (polling) |

| tget_blf | tk_get_mpf | Get fixed length memory block (timeout) |

| ref_mpf | tk_ref_mpf | Reference the fixed length memory pool status |

| set_tim | tk_set_tim | Set the system time |

| get_tim | tk_get_tim | Reference the system time |

| ref_cyc | tk_ref_cyc | Reference the cyclic startup handler status |

| ref_alm | tk_ref_alm | Reference alarm handler status |

| UDEOS/r39 | T-Kernel | Function |

| get_tid | tk_get_tid | Get self task ID |

| can_wup | tk_can_wup | Clear wakeup request |

| rcv_mbf | tk_rcv_mbf | Receive from message buffer |

| prcv_mbf | tk_rcv_mbf | Receive from message buffer (polling) |

| trcv_mbf | tk_rcv_mbf | Receive from message buffer (timeout) |

| loc_cpu | | Forbid interrupts and dispatch |

| unl_cpu | | Allow interrupts and dispatch |

| dis_int | DI | Forbid interrupt |

| ena_int | EI | Allow interrupt |

| chg_ilv | | Change interrupt level |

| def_cyc | | Cyclic startup handler registration |

| act_cyc | | Cyclic startup handler control |

| def_alm | | Register alarm handler |

| get_ver | tk_ref_ver | Get version information |

| UDEOS/r39 | T-Kernel | Function |

| chg_ims | - | Change interrupt mask |

| ref_ims | - | Reference interrupt mask |

| ref_ilv | - | Reference interrupt level |

| ref_sys | tk_ref_sys (argument contents are different) | Reference system status |

____________________

Migration Guide from ITRON to T-Kernel Version 1.00

The names of each company's products in this material are trademarks or registered trademarks of their respective companies.

| The copyrights to this book are as follows: | |

| Version 1.00 First printing and issue: | May 12, 2004 |

| Version 1.00 Second printing and issue: | May 31, 2004 |

| Publisher |

Copyright © 2004 TRON Association

Copyright © 2004 YRP Ubiquitous Networking Laboratory