Today, as a result of the widespread adoption of the Internet across the globe, it has become common sense that all computers should be linked together into two types of networks--a local area network (LAN) and a global, or wide area, network (WAN). Just as humans work more efficiently in groups--and in groups are capable of doing things that an individual human could not do--computers work better in groups and can do things that a single computer cannot easily accomplish. For example, in networks, the unused computing cycles of personal computers that are available when the machines are doing low-powered tasks such as word processing can be harvested in the background and used to solve, or attempt to solve, mathematical problems that previously would have required a standalone supercomputer. In fact, this distributed processing scheme was used to crack a widely used algorithm for data encryption, and the Search for Extraterrestrial Intelligence (SETI) project uses it to try to locate intelligent beings in other parts of the universe.

However, linking computers together wasn't always that obvious. The first personal computers were single-user, single-task machines--much like the original batch processing mainframes they were based on--even though a multiuser, multitask operating system existed for use on 8-bit microprocessors. As noted in the introduction to the BTRON subarchitecture, OS-9, a multiuser, multitask operating system for Motorola Corporation's 8-bit 6809 microprocessor, was available early on in the personal computer revolution--1980 to be exact. If Motorola Corporation rather than IBM Corporation had had the courage to put out the first "mainstream" personal computer, computing history might have been different. A few years later, Motorola microprocessor customers Apple Computer Inc. and Sun Microsystems Inc. caught on to the importance of networking. This was because they based their designs on workstations rather than mainframes. Thus it eventually would become common sense in the U.S. that personal computing devices should be linked together.

Enter the TRON Project with a new idea--everything in the human environment should be computerized and linked together into a "highly functional distributed system" (HFDS) for the comfort and convenience of humans. This idea and a new "real-time total computer architecture" based on it were proposed by Dr. Ken Sakamura of the University of Tokyo in 1982. At the time, it was considered a radical, if not goofy, proposal. Only a year earlier, IBM and Microsoft Corporation had unveiled the IBM-PC, a very primitive single-user, single-task personal computer that lacked a window system, a mouse, a sound card, and, of course, a network interface. Since this personal computer was where it was at in computing, and more importantly, since America was the place from which new computer systems ideas were supposed to emanate, abuse was heaped upon Dr. Sakamura and his radical computer architecture, which was also to be open and royalty free. Here's what some western critics of the HFDS concept said in a 1989 USENET discussion when asked why the TRON Project was drawing little interest.

What I wonder about is this: what would its primary application be? Sure, it's a nice experiment in massive networking, but why is it necessary or even desirable to link all computers together? For example, I don't see any benefit to the computer in my refrigerator being networked to UUNET or anywhere else (except possibly a refrigerator repair center, but then that link would only be needed in case of malfunction). Sorry if these questions seem dumb, but I just have trouble visualizing a society that would want something like this. It sounds like a good concept (computer networking) going overboard.

____________________

In addition, the stated plan [according to a CNN news clip on the TRON Project] was to start out with a sample house and office building, work up to a city--Big Brother watches each toilet flush and reacts accordingly--then to a province (Chiba?), then to all Japan, then to all the world. While this is probably a good warm up for building a large multigeneration spaceship, the vision of a vast number of Z80s communicating with some Fifth Generation Supercomputer with the big payoff being that my heating and airconditioning might be 5 percent more efficient (somehow having a few more thermostats didn't seem to occur to them) does not seem all that interesting. [1]

____________________

One aspect of this, the communication between the processors, has me worried somewhat. When I read some reports on TRON, which are not handy at the moment, I noticed that the intent was for all processors on the TRON network to have the ability to communicate. The "smartness" intended for the TRON network could be subverted to governmental or criminal misuse very easily. Remember, just using a credit card in stores today with card readers could let someone (with intents other than making sure that your credit limit hasn't been exceeded) know where you are and what you're doing.

This is a small sampling of comments from westerners, who viewed the TRON Project as pursuing strange goals for unjustifiable ends and/or posing a potential threat to human privacy and liberty. What has to be said here in regard to the first issue--and this is something that should be taught to all fourth-year computer science majors about to enter industry--is that "different societies use technologies differently, and they have different concerns accordingly." For example, intelligent houses seem like a waste of money until you start to think of them as "devices for aiding the elderly." In Japan today, there are nearly 20,000 people aged 100 or above, and those 75 years or older have recently passed the 10 million mark. To make matters worse, the number of elderly continues to increase, while the number of young people continues to decline. Thus there is neither the money nor the manpower to take care of all these elderly people, and that's why developing intelligent houses with features to assist the elderly and the disabled makes perfect sense in Japan.

As to threats to privacy and liberty, this is something that can be, and has been, accomplished without the aid of computer technology, as both far right wing and far left wing societies in recent human history have proved. In such societies, everyone spied on everyone else, and huge paper dossiers filled up miles of shelf space with the results. In fact, the spying was so pervasive that even members of the same family spied on each other for the domestic surveillance services. Moreover, the comings and goings of people were tracked with an internal passport system, and telecommunications were monitored by severely limiting the number of telephones. Photocopiers and facsimile machines were kept out of the hands of the people to prevent dissent from spreading. In order to ensure that people listened only to government propaganda, radios and televisions were sold that could only tune into government radio and television stations. On and on the list goes of simple measures that can be instituted to allow even a very poor country to create a police state par excellence.

Conversely, technology can be as much of a threat to police state authorities as it is to the populations they rule over. For example, it is said that one of the key elements in deposing the authoritarian government of the former Shah of Iran was the humble cassette recorder, which was used to copy and play back recorded speeches made by his political and ideological rival, Ayatollah Ruhollah Khomeini. In the U.S., the camcorder coupled with distribution of recorded contents via the Internet has been used with similar devastating impact against the central government. For example, when there were mass protests in the U.S. prior to Gulf War II, the corporate controlled mass media played down the large numbers of demonstrators, but the demonstrators filmed themselves and proved via the Internet that hundreds of thousands of people turned out to protest the government's policies. This has led to some alternative news sites on the Internet gaining more credibility than the mainstream news media outlets, and as a result some of their Web sites receive millions of hits per month.

So with these three points established--that different societies use technology differently for different reasons, that you can create a police state without any advanced technology at all, and that technology can be as dangerous to the ruling classes as it can be to the masses--let us take a look at the MTRON subarchitecture of the TRON total architecture.

If a person who knew nothing about the TRON Project asked a person who knew a little about it what it's all about, the latter might reply with a simple response: "massive networking." Actually, what the TRON Project is about is creating a total architecture for a scalable, "highly functional distributed computing system" that will enable "universal computing." Merely connecting large numbers of workstations and personal computers together doesn't make a highly functional distributed computing system; all it makes is a vast data sharing system, which is an apt description of the Internet. Moreover, even this vast data sharing network lacks universality, since most of the information on it is in European languages. What if the person searching for information can't read anything due to the fact that he/she only speaks a minority language or has a visual impairment that prevents him/her from reading anything on a personal computer screen? More importantly, what if that visually impaired person needs help getting home and/or help doing things inside his/her home?

With issues of this type in mind, the TRON Project has since its inception called for personal computers to be equipped with true multilingual data processing capabilities, and it has incorporated into the basic design of BTRON-based personal computers computerized aids for the disabled, which are collectively referred to as "Enableware." However, as noted above, one of the main goals of the project is to create computerized living and work spaces, which first and foremost must justify their existence in the service of the elderly and the disabled in Japan. Such computerized living spaces require that TRON-based networks be designed in a manner that allows them to process inputs in "real time." As a result of Internet terminals locking due to network inputs being timed out, there is no longer any need to explain why networks have to process in real time, but when Dr. Sakamura spoke at the Foreign Correspondents' Club of Japan in 1989, he was asked this very question by technology reporters who couldn't understand the value of real-time processing.

Since such people nowadays probably believe that the Internet is the greatest thing to hit the technological world since the transistor, they might be surprised to learn that although Dr. Sakamura likes the Internet, he is not overly impressed by it. At various symposia, he constantly criticizes the Internet for putting too much of a burden on terminals, and he also dislikes the fact that there is no way of dealing with the concept of "location" in TCP/IP-based networks, such as the Internet. It should be pointed out here that Dr. Sakamura is the person who expanded on Alan Kay's concept of personal computers, which views them as "communication machines" that enable one to communicate with oneself and others. In the TRON Architecture, they are also communication machines for communicating with "intelligent objects," that is, computerized devices inside computerized living spaces. For that to be realized, the network architecture must be able to handle the concept of the physical location of things, not to mention that of people moving through the computerized physical spaces in which they exist.

As noted elsewhere on TRON Web, the TRON Architecture is a "total computer architecture made up of subarchitectures that are themselves divided into subsets." The main subarchitectures, which have all been implemented and commercialized, are: Industrial TRON (ITRON) for machine control, Business TRON (BTRON) for personal computers and workstations, and Central and Communications TRON (CTRON) for mainframe computers and telecommunications switching equipment. In order to enable these three to operate cooperatively with each other in an efficient manner in large-scale networks, a fourth subarchitecture, Macro TRON (MTRON), was proposed. MTRON is, in fact, the key to realizing the ultimate goal of the TRON Project, the construction of the HFDS, which is a hypernetwork of interacting computers. MTRON has been defined as a "composite, multilayered network architecture," and it has provisions for handling the concept of physical location that is necessary for controlling intelligent objects in computerized buildings. The network structure envisioned for MTRON circa 1988 can be seen in the following illustration.

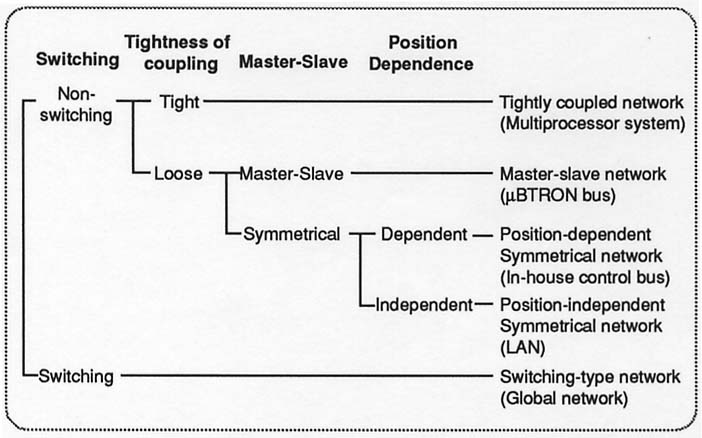

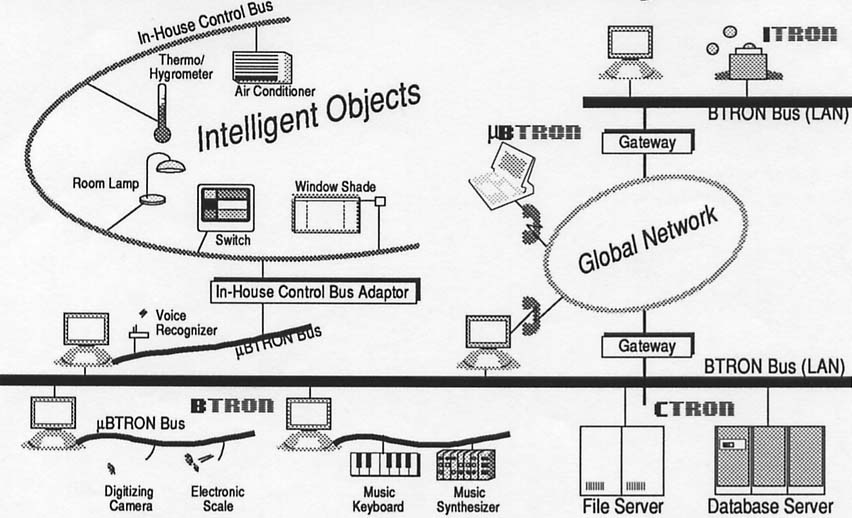

The above illustration, which appeared in the proceedings of the Fifth TRON Project Symposium, shows that the design of MTRON took into account all the known network types at the time, plus TRON-based concepts. These were broadly divided into switching and non-switching networks. Switching networks are global telephone networks, and non-switching networks are LANs, which are usually Ethernet based. The true TRON-based networks are the first three in the right-hand column, which differ from today's networks in that they are to be tightly coupled, master-to-slave, and incorporate the concept of position dependency. Since the Internet had yet to come into widespread use as a global network at the time, it was assumed that global networks would be based on the telephone system and/or private WANs. The telephone companies were transforming their networks with Integrated Services Digital Network (ISDN) technologies. However, the global network of today eventually evolved from interconnected LANs based on the TCP/IP network protocols, which were originally developed for the U.S. Department of Defense in the 1970s to interconnect mainframe computers and their LANs at various sites throughout the U.S. Here's another illustration of this network scheme.

Since the Internet has become a huge thorn in the side of governments and mainstream news media organizations wishing to "manufacture consensus," conceal facts, and spread disinformation, it seems in retrospect that the U.S. government's decision to create a worldwide global information superhighway based on the Internet was one of the most self-defeating things a central government has ever done. The big question is, why? Only a person with inside information knows the true answer, but helping out U.S. industry seems to have been one of the goals. Japan was feverishly at work developing internationally agreed upon ISDN technologies, and France had already put in a nationwide videotex system called Minitel, which was originally intended to provide directory assistance without having to print lots of telephone books. Accordingly, if the world had to suddenly shift to American developed technologies for global networking, American firms would have a leg up on the competition. True, but in hindsight it is obvious that the Internet undercut the private network activities of both Microsoft Corporation and AOL Inc., and it also enabled the free software movement to gain tremendous strength, thus further weakening the profitability of U.S. technology corporations.

Whatever reasoning went into the decision to make the American-developed Internet the axis for global computer networks, the Internet is here, and it will remain with us for a long time. Interestingly, the means for making information available to others on the Internet, the hypertext scheme known as the World Wide Web, was developed at a physics laboratory in Switzerland, and eventually other countries ended up becoming more "wired" to the Internet than the U.S. At one time, Finland was the most wired country on the face of the planet, and then South Korea took the crown away from that country as the result of an ADSL boom in the late 1990s. Singapore, for better or worse, is leading the world in the GPS tracking of automobiles and other motor vehicles plus the switchover to a cashless society. As for Japan, not only was it able to shift gears from ISDN to Internet technologies, but it has also taken the lead in wireless Internet technologies and now sets the standards in that field. In addition, Japan has launched a campaign to become the most wired country in the world, which is why broadband Internet connectivity is now widely available, including 100 Mbps fiber optic line service at very low monthly rates. And France's Minitel network is still in service, since it still provides useful services. Vive la difference.

Designing and building computer networks is a lot like building highways for motor vehicles. This is because both are standards-based pathways designed to enable the efficient flow of traffic. In the case of a physical highway, for example, the subsurface of the road must be prepared in a certain way, and it must then be covered with either concrete or asphalt of a certain thickness and shaped so as to allow rain to run off to the sides of the road. The lanes, of course, must be a certain width, bridges that run across the highway must be of a certain height to allow large vehicles to pass under them, and road inclines must be under a certain angle to allow heavy vehicles and/or low powered vehicles to climb them. That's the hardware. The software is the rules of the road that are taught in driver education courses, plus the signals from the various signaling equipment installed along the highway itself. And that's not to mention various recently developed computer aids that allow for hands-off driving, electronic toll collection, and car navigation systems that alert drivers to traffic congestion and suggest alternative routes.

All this is duplicated in computer networks. There are physical standards for the various types of cables and connectors that connect our computers to the LAN that connects us to the Internet. Most of these are based on open standards drawn up by the Institute of Electrical and Electronics Engineers (IEEE), which also sets standards for wireless networks. Then there are the rules of the road, called "protocols," which allow the vehicles, called "data packets," to arrive at their destination without disappearing in accidents. The basic protocols of the Internet are called Transmission Control Protocol/Internet Protocol, or TCP/IP. The hypertext data system that was constructed on top of the Internet, the World Wide Web, has its own protocol known as Hyper Text Transfer Protocol, or HTTP. The data created to be sent by HTTP are described with Hyper Text Markup Language, or HTML. There is also a network programming language known as Java, which allows small programs known as applets to be sent. Unfortunately, non-standard versions of this language exist, thus leading to frequent Java-based errors.

Since the TRON Project has planned from its inception the creation of highly functional, ubiquitous computing networks, the project has developed and/or proposed some of the most advanced network standards in the world. In the area of hardware, the project implemented the world's first open microprocessor architecture and instruction set, which included instructions to support the ITRON and BTRON subarchitectures [2]. The project also developed an advanced peripherals bus that compares favorably with today's USB2.0, the 4-Mbps µBTRON LAN bus. But where TRON standardization has really stood out is in the area of software standardization. TRON proposed a hypertext-based file system as the standard data model even before the World Wide Web had been invented. It also proposed high-level data standards for ITRON, BTRON, and CTRON systems called TRON Application Data-bus, or TAD. The real piece de resistance in networking standardization, however, was the MTRON architecture for creating the TRON Hypernetwork.

MTRON is literally the architecture of the TRON Hypernetwork, which is also known as the HFDS. It is an architecture aimed at enabling real-time communication and cooperative computing among HFDS network nodes based on the ITRON, BTRON, and CTRON subarchitectures, although other systems naturally could be connected to it through the construction of proper interfaces. This hierarchical network architecture consists of three components: (1) the MTRON interface definition, (2) the MTRON communication protocol standard, and (3) the MTRON operating system. Since MTRON-based networks are designed to handle the concept of position dependency, which is required for constructing intelligent houses, buildings, and even cities, MTRON-based networks will be equipped with databases that list the position, identity, and functionality of each node. Moreover, in order to keep unnecessary network traffic down and thus ensure real-time responsiveness, different communication protocols will be specified for different layers of the MTRON network hierarchy.
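To make the idea of a node database a little more concrete, here is a minimal sketch in Python of a directory that records the position, identity, and functionality of each node and answers position-dependent queries. It is only an illustration under stated assumptions: the names `Node` and `NodeDirectory`, the `(building, floor, room)` position tuple, and the function labels are all hypothetical and do not come from the MTRON design documents.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    """One network node (all field layouts here are hypothetical)."""
    node_id: str          # identity
    kind: str             # e.g. "ITRON", "BTRON", or "CTRON"
    position: tuple       # assumed addressing: (building, floor, room)
    functions: frozenset  # what the node can do, e.g. {"light"}


class NodeDirectory:
    """Toy stand-in for a database of node position, identity, and function."""

    def __init__(self):
        self._nodes = {}

    def register(self, node):
        self._nodes[node.node_id] = node

    def find(self, position_prefix, function):
        """Return sorted ids of nodes under a position that offer a function."""
        prefix = tuple(position_prefix)
        depth = len(prefix)
        return sorted(
            node.node_id
            for node in self._nodes.values()
            if node.position[:depth] == prefix and function in node.functions
        )


directory = NodeDirectory()
directory.register(Node("lamp-1", "ITRON", ("house", 1, "kitchen"), frozenset({"light"})))
directory.register(Node("lamp-2", "ITRON", ("house", 2, "bedroom"), frozenset({"light"})))
directory.register(Node("pc-1", "BTRON", ("house", 1, "study"), frozenset({"display"})))

# Which nodes on the first floor of the house can provide light?
print(directory.find(("house", 1), "light"))
```

A query by position prefix lets a request such as "turn on the lights on this floor" reach only the nodes concerned, which is one plausible way to keep unnecessary traffic off the rest of the network.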

As anyone with common sense knows, setting up something as vast as the TRON Hypernetwork will require decades. For that reason, the TRON Hypernetwork could never be based on fixed, unchangeable protocols, although it had to be designed to deal with them. In their place, it had to be based on dynamically definable protocols, which could be upgraded as construction proceeded. Thus the TRON Project from the very start has proposed the construction of MTRON-based networks using dynamically specified and programmable interface protocols written in an "executable specification language" called "TRON Universal Language System," or TULS. TULS is, in a way, the mother of all programming languages in the TRON Architecture; and, moreover, it even encompasses TAD, which is described with TULS [3]. Some technically savvy people might say that programmable interfaces are already in existence on the Internet, but they were first proposed in the TRON Project. Furthermore, one goal of the MTRON subproject, reconfiguring interfaces to balance loads, has yet to be achieved.

A language based on TULS is TRON Application Control-flow Language, or TACL. TACL is an interface programming language aimed at the BTRON-specification computer that is designed to serve as the human-machine interface in the TRON Hypernetwork. This language is technically referred to as a "macro language," that is, a language that executes macros, which are short sequences of instructions that can be used to perform various tasks. These typeless macros, which have a name, an argument, and a macrobody, are stored both locally in volatile dictionaries and permanently in real objects, which are one and the same as the real objects in the BTRON real object/virtual object file system. TACL is an interactive language that does not distinguish between data and programs, and certain parts of it can be compiled. Since both TAD and TACL are defined within TULS, a TACL macro, not surprisingly, can be defined anywhere in TAD. TACL handles user generated events as macro invocations, and the input argument to a macro can be obtained from data, such as a text object.
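The following Python sketch illustrates the general shape of such a macro facility: macros with a name, an argument, and a body, kept in a volatile dictionary and in a permanent store, and invoked in response to events. It is not TACL and does not reproduce TACL syntax. The class name `MacroStore`, the `$1` placeholder, and the rule that the volatile dictionary is searched before the permanent store are all assumptions made for the sake of illustration.

```python
class MacroStore:
    """Toy macro dictionary; not an implementation of TACL itself."""

    def __init__(self):
        self.volatile = {}   # session-local definitions, lost when the session ends
        self.permanent = {}  # stands in for macros saved in BTRON real objects

    def define(self, name, body, permanent=False):
        """Store a macro body (plain text) under a name."""
        target = self.permanent if permanent else self.volatile
        target[name] = body

    def invoke(self, name, argument):
        """Expand a macro by substituting the argument for the $1 placeholder.

        Assumption: volatile definitions shadow permanent ones.
        """
        if name in self.volatile:
            body = self.volatile[name]
        elif name in self.permanent:
            body = self.permanent[name]
        else:
            raise KeyError(f"undefined macro: {name}")
        return body.replace("$1", argument)


def handle_event(store, event_name, text_object):
    """Treat a user-generated event as a macro invocation whose argument
    is taken from data (here, the contents of a text object)."""
    return store.invoke(event_name, text_object)


store = MacroStore()
store.define("on_click", "open document $1", permanent=True)
print(handle_event(store, "on_click", "report.txt"))
```

Because the body is itself just text, a macro can be stored and passed around like any other datum, which loosely mirrors the point that TACL does not distinguish between data and programs.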

Although TULS and TACL have yet to be fully implemented, the TRON Project has not been idle in the area of computer language development. The Java language developed by Sun Microsystems was implemented on top of the ITRON real-time kernel to speed it up, and the resulting combination, JTRON, has become a hit product widely used in cell-phones in Japan. Not only is Java on ITRON faster than other Java implementations, but it also starts up much more quickly than versions used on personal computers. It wouldn't be an exaggeration to say that JTRON is the network programming language of cell-phones in Japan. As for personal computers, Personal Media Corporation has implemented the MicroScript visual programming language for its commercialization of the BTRON3-specification operating system, which is called Cho Kanji. This language is fairly easy to use, and as a result, there is talk of creating a Japanized version of it to teach programming skills to school children, who will probably use it on a new networkable educational computing terminal developed by PIN CHANGE Co., Ltd.

When TRON Architecture designer Dr. Ken Sakamura first proposed the MTRON architecture, there were no unregulated global computer networks in existence. The idea of commerce via global networks was a far off dream, and computer viruses spread slowly through infected floppies. All of that changed when the Internet was opened up to the general public in the middle of the 1990s. Although the Internet made possible all sorts of new applications--which eventually led to the dot-com investment disaster in the U.S.--it also brought along with it lots of new dangers, not to mention annoyances, such as unsolicited e-mail advertisements, commonly referred to as "spam." But, being the good designer that he is, Dr. Sakamura rose to the challenge and made new proposals that will enable e-commerce via the World Wide Web and the Internet, plus other types of networks. He also proposed a new architecture to allow for the creation of standardized network nodes, and he launched a new project to allow for the easy creation of ubiquitous computing environments, which is the ultimate goal of the TRON Project.

To be more specific, Dr. Sakamura first proposed the eTRON architecture, which can be used both for e-commerce and for securing data on personal computer systems and servers. The name eTRON stands for "Entity TRON." The eTRON architecture, which has already been commercialized, is designed to span multiple types of commerce networks, work with eTRON-based servers via the Internet, and handle public key encryption. However, it specifies no particular encryption algorithms, since these are always changing in accordance with network security threats. This new architecture, believe it or not, has already been tested at several venues in Japan, such as the University of Tokyo's Digital Museum and various science exhibitions. It has received very good reviews, and thus support from Japanese hardware manufacturers. It is expected that eTRON will enable the establishment of e-commerce in Japan at an early stage, particularly via Internet-capable cell-phones that almost everyone seems to carry.

Although TRON VLSI CPU-specification microprocessors were not a commercial success, Dr. Sakamura did not give up on hardware standardization, which has been a crucial component of the TRON movement from the start. He proposed a completely new architecture for embedded devices called T-Engine, which true to the TRON design philosophy consists of subsets. T-Engine itself is aimed at embedded devices that include a graphical user interface (GUI), such as PDAs and cell-phones. The implementations to date are actually full personal computer systems that can rest in the palm of your hand, and they allow for proprietary microprocessor and bus architectures to be used. For devices that require a lesser GUI, there is µT-Engine, and for simple appliances and non-electronic devices there are nanoT-Engine, or nT-Engine, and picoT-Engine, or pT-Engine. The TRON Project's great success in the embedded field and the growing popularity of open, royalty free systems have led to a large number of domestic and foreign technology companies joining the T-Engine Forum.

Another important technology for creating ubiquitous networks is the ubiquitous ID, or uID, which is now a main focus of research and development in the TRON Project. As everyone knows, computer systems can only process data as long as every datum has a number. The uID takes this concept and applies it to everything in the physical environment. While the use of radio frequency identification chips, or RFIDs, embedded with uIDs in commercial supply chains is the most talked-about application these days, numbering everything in the human environment offers many new possibilities heretofore unimaginable. Robots, for example, would be able to "know" the types and sizes of objects in their immediate environment, thus alleviating the need for complicated artificial intelligence programs. The sorting of trash also will be rationalized as the result of using RFIDs with uIDs, and that too could be done with specialized robots. And overseas travelers will not have to worry about pronouncing difficult romanization systems, since signs will have the ability to talk to people in multiple languages.
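As a rough illustration of what "giving everything a number" makes possible, the Python sketch below assigns a unique number to each registered object and lets a robot or a traveler's terminal look up what the number stands for. The class `UidRegistry`, its record fields, and the simple counter used to issue numbers are hypothetical; the sketch makes no claim about the actual uID format or how real uIDs are issued and resolved.

```python
import itertools


class UidRegistry:
    """Toy registry mapping unique numbers to object records (hypothetical)."""

    def __init__(self):
        self._next = itertools.count(1)  # assumed: ids are simply issued in sequence
        self._records = {}

    def assign(self, kind, size_cm=None, labels=None):
        """Register an object and return the number assigned to it."""
        uid = next(self._next)
        self._records[uid] = {
            "kind": kind,
            "size_cm": size_cm,
            "labels": dict(labels or {}),
        }
        return uid

    def describe(self, uid):
        """What a robot might ask: what type and size is this object?"""
        return self._records[uid]

    def label(self, uid, language, fallback="en"):
        """What a talking sign might do: answer in the visitor's language,
        falling back to an assumed default language when none is stored."""
        labels = self._records[uid]["labels"]
        return labels.get(language, labels.get(fallback))


registry = UidRegistry()
sign = registry.assign("sign", labels={"ja": "出口", "en": "Exit"})
bottle = registry.assign("bottle", size_cm=(7, 7, 25))

print(registry.label(sign, "en"))
print(registry.describe(bottle)["kind"])
```

The point of the sketch is that the tag on the object only needs to carry the number; everything else about the object can live in a database that any authorized device can consult.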

No one has ever developed the types of networks that the TRON Project envisions, which are real-time LANs that interface seamlessly with real-time WANs, so it is impossible to describe exactly how they will be built. However, in designing such WANs, common sense tells us that in order to ensure real-time throughput during surges, average throughput is going to have to be kept to 70 or 80 percent of maximum bandwidth. Since it is impossible to expand the physical infrastructure of networks endlessly, the answer would seem to be to limit data amounts passing through the networks. And that leads us to ask what types of data take up the most bandwidth. The answer, of course, is complex graphics and audio-visual data. For something like video-on-demand, which uses a lot of bandwidth, one could easily see parallel networks coming into existence, since this is a pay-per-view service and quality of service is important to customers. By using a server-to-server satellite link, for example, a lot of WAN bottlenecks could be avoided, and thus preserve real-time throughput of other data on the WANs.
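The arithmetic behind the 70 to 80 percent rule of thumb can be sketched in a few lines of Python. The function names and the 100 Mbps link used in the example are assumptions chosen for illustration only; the calculation simply shows how much average traffic a link may carry under a given utilization ceiling and how large a surge it can then absorb.

```python
def max_average_throughput(capacity_mbps, utilization_ceiling):
    """Largest average load allowed when utilization is capped at the ceiling."""
    if not 0 < utilization_ceiling <= 1:
        raise ValueError("utilization_ceiling must be in (0, 1]")
    return capacity_mbps * utilization_ceiling


def absorbable_surge_factor(utilization_ceiling):
    """How many times the capped average load the link can carry at full capacity."""
    if not 0 < utilization_ceiling <= 1:
        raise ValueError("utilization_ceiling must be in (0, 1]")
    return 1 / utilization_ceiling


def within_budget(capacity_mbps, observed_average_mbps, utilization_ceiling):
    """True if the observed average load respects the ceiling."""
    limit = max_average_throughput(capacity_mbps, utilization_ceiling)
    return observed_average_mbps <= limit


# Hypothetical 100 Mbps link evaluated at the two ceilings mentioned in the text.
for ceiling in (0.7, 0.8):
    average = max_average_throughput(100, ceiling)
    surge = absorbable_surge_factor(ceiling)
    print(f"ceiling {ceiling:.0%}: average <= {average:.0f} Mbps, "
          f"surge factor {surge:.2f}")
```

Lowering the ceiling from 80 to 70 percent gives up some average capacity in exchange for a larger margin for surges, which is why bandwidth-hungry traffic such as video is a natural candidate for a separate, parallel network.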

One thing is for certain--network design has a long way to go before we will finally see the age of ubiquitous computing. Sun Microsystems is fond of saying that "the network is the computer," but network design is nothing like computer design. It is pretty obvious that the Internet has been developed in a helter skelter manner, which means that there are bottlenecks all over its topography. Accordingly, in the future, network design is going to have to be taken more seriously, its designers are going to have to develop better methods to monitor and regulate its traffic, and new means of measuring throughput are going to have to be created. In the past, we used to measure computing in terms of millions of instructions per second, or millions of floating point operations per second. However, in the future, we are probably going to be more concerned with LAN node interactions per millisecond and/or WAN server data swaps per microsecond. For those who like designing computer systems, this is really something to sink your teeth into. It's literally all about controlling chaos at the speed of light.

____________________

[1] What the person who posted this opinion is talking about is a small computer calling up the resources of a large computer to perform a function that it cannot perform, in this case calculating the optimum air flow and heating or cooling in a home. Today, this is called "grid computing," since like an "electrical grid" resources can be redirected to anywhere they are needed. Interestingly, grid computing is the next "great idea" in networking that is about to be realized in Europe and the U.S., but when it was proposed 15 years ago by Dr. Sakamura, it was ridiculed as "not interesting." If you want to do something new, this is what you're up against; and if you want to succeed at something new, this is what you must ignore.

[2] Certain TRON Project critics believe that the TRON VLSI CPU architecture was a bad architecture, since it was a CISC-based design. According to these individuals, Dr. Sakamura mistakenly developed a CISC-based microprocessor, because he had not heard of the RISC designs being developed in the U.S. In fact, as a member of the IEEE, Dr. Sakamura was well aware of what was going on in the U.S. He chose a CISC-based design, because no RISC-based design at the time was capable of good performance in both embedded systems and high-end applications, such as workstations and servers. An added advantage of a CISC-based design is that the object code of programs is very small, which is a highly welcome feature if you plan to send programs around networks for temporary use by end users at network terminals.

[3] There are people in the Unicode camp who are of the opinion that the TRON Project is stubbornly sticking with TRON Code and the TRON Multilingual Environment for emotional reasons--being spoilsports, as it were, for not having enough political influence to get its way at the ISO 10646 technical committee. Actually, in addition to the various technical and cultural reasons outlined elsewhere on TRON Web, another reason for rejecting Unicode is that both TRON Code and the TRON Multilingual Environment are parts of high-level data standardization defined in TAD. That is to say, they are both integral parts of the TRON Architecture, and thus neither can be discarded.

Sakamura, Ken. "TULS: TRON Universal Language System." TRON Project 1988 Open-Architecture Computer Systems: Proceedings of the Fifth TRON Project Symposium. Tokyo: Springer-Verlag, 1988, pp. 3-19.

Sakamura, Ken. "Design of MTRON: Construction of the HFDS." TRON Project 1988 Open-Architecture Computer Systems: Proceedings of the Fifth TRON Project Symposium. Tokyo: Springer-Verlag, 1988, pp. 21-32.

Sakamura, Ken. "TACL: TRON Application Control-Flow Language." TRON Project 1988 Open-Architecture Computer Systems: Proceedings of the Fifth TRON Project Symposium. Tokyo: Springer-Verlag, 1988, pp. 80-92.

Sakamura, Ken. "Programmable Interface Design in HFDS." TRON Project 1990 Open-Architecture Computer Systems: Proceedings of the Seventh TRON Project Symposium. Tokyo: Springer-Verlag, 1990, pp. 3-22.